Python is a great development language, but it lacks proper distribution and packaging support if you want end users to get your application. PyPI and wheel packages are mostly intended for developers installing their own applications' dependencies; an end user will find it painful to use pip and virtualenv just to install and run a Python application.

Some projects try to solve that problem, such as PyInstaller, py2exe or cx_Freeze. I maintain vdist, which is closely related to this problem: it tries to solve it by creating Debian, RPM and Arch Linux packages from Python applications. In this article I'm going to analyze PyOxidizer. This tool is written in a language I really love (Rust) and follows an approach somewhat similar to vdist's, as it bundles your application along with a Python distribution, but it additionally compiles the entire bundle into an executable binary.

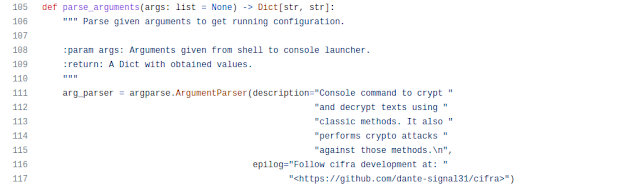

To structure this tutorial, I'm going to build and compile my Cifra project. You can clone it at this point to follow this tutorial step by step.

The first thing to be aware of is that PyOxidizer bundles your application with a customized Python 3.8 or 3.9 distribution, so your app must be compatible with one of those. You will also need a C compiler/toolchain to build with PyOxidizer; if you don't have one, PyOxidizer outputs instructions to install it. PyOxidizer uses a Rust toolchain too, but it downloads it in the background for you, so it's not a dependency you need to worry about.
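Before installing anything, a quick sanity check can save you a failed build. The following is a minimal sketch, not part of PyOxidizer: it assumes your virtualenv's interpreter is a reasonable proxy for the Python version your app targets, and the helper name `check_build_prerequisites` and the compiler names it probes (`cc`, `gcc`, `clang`) are my own choices.

```python
import shutil
import sys

# Python versions covered by PyOxidizer's bundled distributions (see above).
SUPPORTED_VERSIONS = {(3, 8), (3, 9)}

# Hypothetical selection of common C compiler command names to look for.
COMPILER_CANDIDATES = ("cc", "gcc", "clang")


def check_build_prerequisites(version_info=sys.version_info):
    """Return a list of problems found; an empty list means nothing obvious is missing.

    Hypothetical helper, not a PyOxidizer API.
    """
    problems = []
    major_minor = (version_info[0], version_info[1])
    if major_minor not in SUPPORTED_VERSIONS:
        problems.append(
            "Python %d.%d detected, but the app should target 3.8 or 3.9" % major_minor
        )
    if not any(shutil.which(name) for name in COMPILER_CANDIDATES):
        problems.append("no C compiler (cc, gcc or clang) found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_build_prerequisites():
        print("WARNING:", problem)
```

Run it with the virtualenv active; if it prints nothing, no obvious prerequisite is missing.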

PyOxidizer installation

There are several ways to install PyOxidizer (downloading a compiled release from its GitHub page, or compiling from source), but I find it more straightforward and cleaner to install it into your project's virtualenv using pip.

Assuming you have cloned the Cifra project (in my case at ~/Projects/cifra), created a virtualenv in a venv folder inside it and activated that virtualenv, you can install PyOxidizer into that virtualenv with:

(venv)dante@Camelot:~/Projects/cifra$ pip install pyoxidizer

Collecting pyoxidizer

Downloading pyoxidizer-0.17.0-py3-none-manylinux2010_x86_64.whl (9.9 MB)

|████████████████████████████████| 9.9 MB 11.5 MB/s

Installing collected packages: pyoxidizer

Successfully installed pyoxidizer-0.17.0

(venv)dante@Camelot:~/Projects/cifra$
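If you prefer to confirm from Python that PyOxidizer landed inside the active virtualenv rather than in your system site-packages, a minimal sketch relying only on the standard library (`importlib.metadata` needs Python 3.8+) could look like this; both helper names are hypothetical:

```python
import sys
from importlib import metadata


def in_virtualenv():
    """True when running inside a venv/virtualenv (hypothetical helper)."""
    # Legacy virtualenv sets sys.real_prefix; venv makes sys.prefix differ from sys.base_prefix.
    return hasattr(sys, "real_prefix") or sys.prefix != sys.base_prefix


def installed_version(distribution_name):
    """Installed version string of a distribution, or None (hypothetical helper)."""
    try:
        return metadata.version(distribution_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("inside virtualenv:", in_virtualenv())
    print("pyoxidizer version:", installed_version("pyoxidizer"))
```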

You can check that PyOxidizer is properly installed with:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer --help

PyOxidizer 0.17.0

Gregory Szorc <gregory.szorc@gmail.com>

Build and distribute Python applications

USAGE:

pyoxidizer [FLAGS] [SUBCOMMAND]

FLAGS:

-h, --help

Prints help information

--system-rust

Use a system install of Rust instead of a self-managed Rust installation

-V, --version

Prints version information

--verbose

Enable verbose output

SUBCOMMANDS:

add Add PyOxidizer to an existing Rust project. (EXPERIMENTAL)

analyze Analyze a built binary

build Build a PyOxidizer enabled project

cache-clear Clear PyOxidizer's user-specific cache

find-resources Find resources in a file or directory

help Prints this message or the help of the given subcommand(s)

init-config-file Create a new PyOxidizer configuration file.

init-rust-project Create a new Rust project embedding a Python interpreter

list-targets List targets available to resolve in a configuration file

python-distribution-extract Extract a Python distribution archive to a directory

python-distribution-info Show information about a Python distribution archive

python-distribution-licenses Show licenses for a given Python distribution

run Run a target in a PyOxidizer configuration file

run-build-script Run functionality that a build script would perform

(venv)dante@Camelot:~/Projects/cifra$

PyOxidizer configuration

Now create an initial PyOxidizer configuration file at your project root folder:

(venv)dante@Camelot:~/Projects/cifra$ cd ..

(venv) dante@Camelot:~/Projects$ pyoxidizer init-config-file cifra

writing cifra/pyoxidizer.bzl

A new PyOxidizer configuration file has been created.

This configuration file can be used by various `pyoxidizer`

commands

For example, to build and run the default Python application:

$ cd cifra

$ pyoxidizer run

The default configuration is to invoke a Python REPL. You can

edit the configuration file to change behavior.

(venv)dante@Camelot:~/Projects$

The generated pyoxidizer.bzl is written in Starlark, a Python dialect for configuration files, so we'll feel at home there. Nevertheless, that configuration file is rather long and, although it is fully commented, at first it is not clear how all the moving parts fit together. PyOxidizer's documentation is pretty complete too, but it can be rather overwhelming for anyone who only wants to get started. Of the entire PyOxidizer documentation site, I've found this section to be the most helpful for customizing the configuration file.

There are many approaches to building binaries with PyOxidizer. Assuming you have a setuptools setup.py file for your project, we can ask PyOxidizer to run that setup.py inside its customized Python. To do that, add the following line before the return line of the make_exe() function in pyoxidizer.bzl:

# Run cifra's own setup.py and include installed files in binary bundle.

exe.add_python_resources(exe.setup_py_install(package_path=CWD))

In the package_path parameter you have to provide the path to the directory containing your setup.py. I've passed CWD because I'm assuming pyoxidizer.bzl and setup.py are in the same folder.

The next thing to customize in pyoxidizer.bzl is that it defaults to building a Windows MSI installer. On Linux we want an ELF executable instead, so first comment out the line near the end that registers a target to build an MSI installer:

#register_target("msi_installer", make_msi, depends=["exe"])

We also need an entry point for our application. It would be nice if PyOxidizer took the entry_points parameter from setup.py, but it doesn't. Instead, we have to provide it manually in the pyoxidizer.bzl configuration file. In our example, just find the line in the make_exe() function where the python_config variable is created and add the following after it:

python_config.run_command = "from cifra.cifra_launcher import main; main()"

Building executable binaries

At this point, you can run "pyoxidizer build" and PyOxidizer will begin bundling our application.
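Because PyOxidizer doesn't read setup.py's entry_points, the run_command string has to be kept in sync with your console script by hand. As a sketch, assuming your setup.py declares a console_scripts entry such as `cifra = cifra.cifra_launcher:main` (an assumption inferred from the run_command used above), a hypothetical helper can translate it into the equivalent string:

```python
def run_command_from_entry_point(spec):
    """Translate a console_scripts spec into a PyOxidizer run_command string.

    Hypothetical helper. Accepts "name = module:attr" or just "module:attr";
    attr may be dotted (e.g. "App.run"), in which case its first component
    is the name imported from the module.
    """
    # Keep only the right-hand side of an optional "name =" prefix.
    target = spec.split("=", 1)[-1].strip()
    module, sep, attr = target.partition(":")
    # Drop any trailing extras marker such as "[cli]".
    attr = attr.split("[", 1)[0].strip()
    module = module.strip()
    if not sep or not module or not attr:
        raise ValueError("expected 'module:function', got %r" % spec)
    imported_name = attr.split(".", 1)[0]
    return "from %s import %s; %s()" % (module, imported_name, attr)


if __name__ == "__main__":
    print(run_command_from_entry_point("cifra = cifra.cifra_launcher:main"))
    # prints: from cifra.cifra_launcher import main; main()
```

You would still paste the resulting string into pyoxidizer.bzl yourself; the helper only avoids typos when the entry point changes.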

But Cifra has a very specific problem at this point. If you build Cifra with the configuration so far, you will get the following error:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer build

[...]

error[PYOXIDIZER_PYTHON_EXECUTABLE]: adding PythonExtensionModule<name=sqlalchemy.cimmutabledict>

Caused by:

extension module sqlalchemy.cimmutabledict cannot be loaded from memory but memory loading required

--> ./pyoxidizer.bzl:272:5

|

272 | exe.add_python_resources(exe.setup_py_install(package_path=CWD))

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ add_python_resources()

error: adding PythonExtensionModule<name=sqlalchemy.cimmutabledict>

Caused by:

extension module sqlalchemy.cimmutabledict cannot be loaded from memory but memory loading required

(venv)dante@Camelot:~/Projects$

PyOxidizer tries to embed every dependency of your application inside the produced binary (in-memory mode). This is nice because you end up with a single distributable binary file and those dependencies load faster. The problem is that not every package out there can be embedded that way. Here, SQLAlchemy's compiled extension module sqlalchemy.cimmutabledict fails to be embedded.

In that case, those dependencies must be stored next to the produced binary (filesystem-relative mode). The binary references those dependencies by their location relative to itself, so when distributing we must pack the binary and its dependency files together, preserving their relative locations.
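If you want to know in advance which dependencies are likely to hit this, a rough heuristic is to look for compiled extension modules inside them, since that is exactly what the error above complains about (sqlalchemy.cimmutabledict). A minimal sketch using only the standard library; the function names are mine, and a match only flags a candidate, it doesn't guarantee PyOxidizer will reject the package:

```python
import importlib.machinery
from pathlib import Path


def is_extension_module(filename, suffixes=None):
    """True if filename ends with a suffix this interpreter uses for C extensions.

    Hypothetical helper; defaults to importlib.machinery.EXTENSION_SUFFIXES.
    """
    if suffixes is None:
        suffixes = importlib.machinery.EXTENSION_SUFFIXES
    return filename.endswith(tuple(suffixes))


def find_extension_modules(package_dir):
    """Sorted relative paths of compiled extension modules under package_dir."""
    root = Path(package_dir)
    return sorted(
        str(path.relative_to(root))
        for path in root.rglob("*")
        if path.is_file() and is_extension_module(path.name)
    )


if __name__ == "__main__":
    import sqlalchemy  # assumes SQLAlchemy is installed in this environment

    print(find_extension_modules(Path(sqlalchemy.__file__).parent))
```

Run against SQLAlchemy, it should list its compiled modules; the build log further down shows one of them, cresultproxy.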

One way to deal with this problem is to ask PyOxidizer to keep things in memory whenever it can and fall back to filesystem-relative when it can't. To do that, uncomment the following two lines in the make_exe() function of the configuration file:

# Use in-memory location for adding resources by default.

policy.resources_location = "in-memory"

# Use filesystem-relative location for adding resources by default.

# policy.resources_location = "filesystem-relative:prefix"

# Attempt to add resources relative to the built binary when

# `resources_location` fails.

policy.resources_location_fallback = "filesystem-relative:prefix"

This may be enough to make your application work, but for Cifra things keep failing, even though the build gets further:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer build

[...]

adding extra file prefix/sqlalchemy/cresultproxy.cpython-39-x86_64-linux-gnu.so to .

installing files to /home/dante/Projects/cifra/./build/x86_64-unknown-linux-gnu/debug/install

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "cifra.cifra_launcher", line 31, in <module>

File "cifra.attack.vigenere", line 21, in <module>

File "cifra.tests.test_simple_attacks", line 2, in <module>

File "pytest", line 5, in <module>

File "_pytest.assertion", line 9, in <module>

File "_pytest.assertion.rewrite", line 34, in <module>

File "_pytest.assertion.util", line 13, in <module>

File "_pytest._code", line 2, in <module>

File "_pytest._code.code", line 1223, in <module>

AttributeError: module 'pluggy' has no attribute '__file__'

error: cargo run failed

(venv)dante@Camelot:~/Projects$

This error seems somewhat related to this PyOxidizer nuance.
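The traceback ends in pytest's internals reading pluggy.__file__, an attribute that modules loaded from memory may not define. That code belongs to a third-party package, so I couldn't change it, but if you control the code, the defensive pattern is to treat __file__ as optional. A minimal sketch; the helper name is hypothetical, and a bare types.ModuleType only imitates an in-memory module for illustration:

```python
import os
import types


def module_directory(module):
    """Directory a module was loaded from, or None when it has no __file__.

    Hypothetical helper: modules served from memory may lack __file__,
    so never assume the attribute exists.
    """
    path = getattr(module, "__file__", None)
    return os.path.dirname(path) if path else None


if __name__ == "__main__":
    # A module object created at runtime has no __file__, like an in-memory one.
    in_memory_like = types.ModuleType("pluggy_like")
    print(module_directory(in_memory_like))  # prints None instead of raising AttributeError
```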

To deal with this problem I had to keep everything filesystem-relative:

# Use in-memory location for adding resources by default.

# policy.resources_location = "in-memory"

# Use filesystem-relative location for adding resources by default.

policy.resources_location = "filesystem-relative:prefix"

# Attempt to add resources relative to the built binary when

# `resources_location` fails.

# policy.resources_location_fallback = "filesystem-relative:prefix"

Now the build goes smoothly:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer build

[...]

installing files to /home/dante/Projects/cifra/./build/x86_64-unknown-linux-gnu/debug/install

(venv)dante@Camelot:~/Projects/cifra$ls build/x86_64-unknown-linux-gnu/debug/install/

cifra prefix

(venv)dante@Camelot:~/Projects$

As you can see, PyOxidizer generated an ELF binary for our application and stored all of its dependencies in the prefix folder:

(venv)dante@Camelot:~/Projects/cifra$ ls build/x86_64-unknown-linux-gnu/debug/install/prefix

abc.py concurrent __future__.py _markupbase.py pty.py sndhdr.py tokenize.py

aifc.py config-3 genericpath.py mimetypes.py py socket.py token.py

_aix_support.py configparser.py getopt.py modulefinder.py _py_abc.py socketserver.py toml

antigravity.py contextlib.py getpass.py multiprocessing __pycache__ sqlalchemy traceback.py

argparse.py contextvars.py gettext.py netrc.py pyclbr.py sqlite3 tracemalloc.py

ast.py copy.py glob.py nntplib.py py_compile.py sre_compile.py trace.py

asynchat.py copyreg.py graphlib.py ntpath.py _pydecimal.py sre_constants.py tty.py

asyncio cProfile.py greenlet nturl2path.py pydoc_data sre_parse.py turtledemo

asyncore.py crypt.py gzip.py numbers.py pydoc.py ssl.py turtle.py

attr csv.py hashlib.py opcode.py _pyio.py statistics.py types.py

base64.py ctypes heapq.py operator.py pyparsing stat.py typing.py

bdb.py curses hmac.py optparse.py _pytest stringprep.py unittest

binhex.py dataclasses.py html os.py pytest string.py urllib

bisect.py datetime.py http _osx_support.py queue.py _strptime.py uuid.py

_bootlocale.py dbm idlelib packaging quopri.py struct.py uu.py

_bootsubprocess.py decimal.py imaplib.py pathlib.py random.py subprocess.py venv

bz2.py difflib.py imghdr.py pdb.py reprlib.py sunau.py warnings.py

calendar.py dis.py importlib __phello__ re.py symbol.py wave.py

cgi.py distutils imp.py pickle.py rlcompleter.py symtable.py weakref.py

cgitb.py _distutils_hack iniconfig pickletools.py runpy.py _sysconfigdata__linux_x86_64-linux-gnu.py _weakrefset.py

chunk.py doctest.py inspect.py pip sched.py sysconfig.py webbrowser.py

cifra email io.py pipes.py secrets.py tabnanny.py wsgiref

cmd.py encodings ipaddress.py pkg_resources selectors.py tarfile.py xdrlib.py

codecs.py ensurepip json pkgutil.py setuptools telnetlib.py xml

codeop.py enum.py keyword.py platform.py shelve.py tempfile.py xmlrpc

code.py filecmp.py lib2to3 plistlib.py shlex.py test_common zipapp.py

collections fileinput.py linecache.py pluggy shutil.py textwrap.py zipfile.py

_collections_abc.py fnmatch.py locale.py poplib.py signal.py this.py zipimport.py

colorsys.py formatter.py logging posixpath.py _sitebuiltins.py _threading_local.py zoneinfo

_compat_pickle.py fractions.py lzma.py pprint.py site.py threading.py

compileall.py ftplib.py mailbox.py profile.py smtpd.py timeit.py

_compression.py functools.py mailcap.py pstats.py smtplib.py tkinter

(venv)dante@Camelot:~/Projects$

If you want to give that folder a more self-explanatory name, just replace "prefix" with whatever you want in the configuration file. For instance, to name it "lib":

# Use in-memory location for adding resources by default.

# policy.resources_location = "in-memory"

# Use filesystem-relative location for adding resources by default.

policy.resources_location = "filesystem-relative:lib"

# Attempt to add resources relative to the built binary when

# `resources_location` fails.

# policy.resources_location_fallback = "filesystem-relative:prefix"

Let's see how our dependencies folder changed:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer build

[...]

installing files to /home/dante/Projects/cifra/./build/x86_64-unknown-linux-gnu/debug/install

(venv)dante@Camelot:~/Projects/cifra$ls build/x86_64-unknown-linux-gnu/debug/install/

cifra lib

(venv)dante@Camelot:~/Projects$
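Since the binary locates its dependencies by their path relative to itself, whatever you ship must keep cifra and lib side by side. A minimal sketch using Python's tarfile module, which stores each file's relative path and permission bits (so the executable stays executable); the function names and the `cifra-dist` top-level folder are my own choices:

```python
import io
import tarfile
from pathlib import Path


def pack_install_dir(install_dir, top_level="cifra-dist"):
    """Return a .tar.gz (as bytes) holding install_dir's contents under top_level/.

    Hypothetical helper. tarfile records relative paths and permission bits,
    so the binary and its dependency folder keep their layout on extraction.
    """
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        # arcname re-roots the tree: install/lib/... becomes top_level/lib/...
        archive.add(str(install_dir), arcname=top_level)
    return buffer.getvalue()


def archive_members(data):
    """List the member names of a .tar.gz given as bytes (hypothetical helper)."""
    with tarfile.open(fileobj=io.BytesIO(data), mode="r:gz") as archive:
        return archive.getnames()


if __name__ == "__main__":
    install = Path("build/x86_64-unknown-linux-gnu/debug/install")
    Path("cifra-dist.tar.gz").write_bytes(pack_install_dir(install))
```

Extracting that archive anywhere recreates cifra-dist/cifra next to cifra-dist/lib, which is the layout the binary expects.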

Building for many distributions

So far, you have a binary that can run on any Linux distribution like the one you used to build it. The problem is that our binary can fail if it's run on other distributions:

(venv)dante@Camelot:~/Projects/cifra$ ls build/x86_64-unknown-linux-gnu/debug/install/

cifra lib

(venv) dante@Camelot:~/Projects/cifra$ docker run -d -ti -v $(pwd)/build/x86_64-unknown-linux-gnu/debug/install/:/work ubuntu

36ad7e78c00f90172bd664f9a045f14acc2138b2ee5b8ac38e7158aa145d4965

(venv) dante@Camelot:~/Projects/cifra$ docker attach 36ad

root@36ad7e78c00f:/# ls /work

cifra lib

root@36ad7e78c00f:/# /work/cifra --help

usage: cifra [-h] {dictionary,cipher,decipher,attack} ...

Console command to crypt and decrypt texts using classic methods. It also performs crypto attacks against those methods.

positional arguments:

{dictionary,cipher,decipher,attack}

Available modes

dictionary Manage dictionaries to perform crypto attacks.

cipher Cipher a text using a key.

decipher Decipher a text using a key.

attack Attack a ciphered text to get its plain text

optional arguments:

-h, --help show this help message and exit

Follow cifra development at: <https://github.com/dante-signal31/cifra>

root@36ad7e78c00f:/# exit

exit

(venv)dante@Camelot:~/Projects$

Here our binary runs on another Ubuntu because my personal box (Camelot) is Ubuntu-based (actually Linux Mint). Our generated binary will run fine on other machines with the same distribution as the one used to build it.

But let's see what happens if we run our binary in a different distribution:

(venv)dante@Camelot:~/Projects/cifra$ docker run -d -ti -v $(pwd)/build/x86_64-unknown-linux-gnu/debug/install/:/work centos

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

a1d0c7532777: Pull complete

Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Status: Downloaded newer image for centos:latest

376c6f0d085d9947453a2786a9a712146c471dfbeeb4c452280bd028a5f5126f

(venv) dante@Camelot:~/Projects/cifra$ docker attach 376

[root@376c6f0d085d /]# ls /work

cifra lib

[root@376c6f0d085d /]# /work/cifra --help

/work/cifra: /lib64/libm.so.6: version `GLIBC_2.29' not found (required by /work/cifra)

[root@376c6f0d085d /]# exit

exit

(venv)dante@Camelot:~/Projects$

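As you can see, our binary fails on CentOS. That happens because it was dynamically linked against the glibc of my build machine and requires a symbol version (GLIBC_2.29) that the older glibc shipped by this CentOS image doesn't provide, so it can't load the shared libraries it needs to run.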
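To anticipate this error before shipping, you can compare the glibc version the binary requires (GLIBC_2.29 in the message above) with the one available on the target machine. A minimal sketch, assuming Python is available on that machine to run it; the helper names are mine:

```python
import platform


def parse_version(text):
    """Turn a dotted version such as '2.29' into a comparable tuple (2, 29)."""
    return tuple(int(part) for part in text.split("."))


def glibc_satisfies(required, available=None):
    """True if the available glibc is at least `required` (e.g. '2.29').

    Hypothetical helper. When `available` is omitted, ask platform.libc_ver()
    about the running interpreter; if it doesn't report glibc, return False
    rather than guess.
    """
    if available is None:
        library, available = platform.libc_ver()
        if library != "glibc" or not available:
            return False
    return parse_version(available) >= parse_version(required)


if __name__ == "__main__":
    print(platform.libc_ver())
    print("can run a binary needing GLIBC_2.29:", glibc_satisfies("2.29"))
```

Because glibc is backward compatible, a binary built against an older glibc generally runs on newer ones, which is why building on the oldest distribution you intend to support is a common workaround when static linking isn't an option.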

To solve this you need to build a fully statically linked binary, so it has no external dependencies at all.
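To check whether a produced binary is really static, you can look for a PT_INTERP program header: a dynamically linked ELF executable names its dynamic loader there, while a static one has none. A minimal sketch that parses just enough of the ELF header to find it; tools like `file` or `ldd` give you the same answer, and the function names are mine:

```python
import struct

PT_INTERP = 3  # program header type that names the dynamic loader


def elf_requests_interpreter(data):
    """True if the ELF image in `data` (bytes) has a PT_INTERP program header.

    Hypothetical helper; handles 32/64-bit and little/big-endian ELF files.
    """
    if data[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    is_64 = data[4] == 2  # EI_CLASS: 1 = 32-bit, 2 = 64-bit
    endian = "<" if data[5] == 1 else ">"  # EI_DATA: 1 = little, 2 = big endian
    if is_64:
        # e_phoff is 8 bytes at offset 32; e_phentsize and e_phnum at 54 and 56.
        (phoff,) = struct.unpack_from(endian + "Q", data, 32)
        phentsize, phnum = struct.unpack_from(endian + "HH", data, 54)
    else:
        # e_phoff is 4 bytes at offset 28; e_phentsize and e_phnum at 42 and 44.
        (phoff,) = struct.unpack_from(endian + "I", data, 28)
        phentsize, phnum = struct.unpack_from(endian + "HH", data, 42)
    for index in range(phnum):
        # p_type is the first 4-byte field of every program header entry.
        (p_type,) = struct.unpack_from(endian + "I", data, phoff + index * phentsize)
        if p_type == PT_INTERP:
            return True
    return False


def is_dynamically_linked(path):
    """Read an executable from disk and check it for a PT_INTERP header."""
    with open(path, "rb") as binary:
        return elf_requests_interpreter(binary.read())
```

For instance, is_dynamically_linked("build/x86_64-unknown-linux-gnu/debug/install/cifra") should return True for the glibc build above, and False for a truly static one.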

To build that kind of binary, we need to add a musl target to the Rust toolchain:

(venv)dante@Camelot:~/Projects/cifra$ rustup target add x86_64-unknown-linux-musl

info: downloading component 'rust-std' for 'x86_64-unknown-linux-musl'

info: installing component 'rust-std' for 'x86_64-unknown-linux-musl'

30.4 MiB / 30.4 MiB (100 %) 13.9 MiB/s in 2s ETA: 0s

(venv)dante@Camelot:~/Projects$

To make PyOxidizer build a binary like that, you are supposed to run:

(venv)dante@Camelot:~/Projects/cifra$ pyoxidizer build --target-triple x86_64-unknown-linux-musl

[...]

Processing greenlet-1.1.1.tar.gz

Writing /tmp/easy_install-futc9qp4/greenlet-1.1.1/setup.cfg

Running greenlet-1.1.1/setup.py -q bdist_egg --dist-dir /tmp/easy_install-futc9qp4/greenlet-1.1.1/egg-dist-tmp-zga041e7

no previously-included directories found matching 'docs/_build'

warning: no files found matching '*.py' under directory 'appveyor'

warning: no previously-included files matching '*.pyc' found anywhere in distribution

warning: no previously-included files matching '*.pyd' found anywhere in distribution

warning: no previously-included files matching '*.so' found anywhere in distribution

warning: no previously-included files matching '.coverage' found anywhere in distribution

error: Setup script exited with error: command 'musl-clang' failed: No such file or directory

thread 'main' panicked at 'called `Result::unwrap()` on an `Err` value: Custom { kind: Other, error: "command [\"/home/dante/.cache/pyoxidizer/python_distributions/python.70974f0c6874/python/install/bin/python3.9\", \"setup.py\", \"install\", \"--prefix\", \"/tmp/pyoxidizer-setup-py-installvKsIUS/install\", \"--no-compile\"] exited with code 1" }', pyoxidizer/src/py_packaging/packaging_tool.rs:336:38

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

(venv)dante@Camelot:~/Projects$

As you can see, I got an error that I've been unable to solve. Because of that, I filed an issue on PyOxidizer's GitHub page. PyOxidizer's author kindly answered, pointing out that I needed the musl-clang command on my system. I haven't found any package providing musl-clang, so I guess it's something you have to compile from source. I've tried googling it, but I haven't found a clear way to get that command up and running (I have to admit I'm not a C/C++ ninja). So I guess I'll have to use PyOxidizer without static compilation until the musl-clang dependency disappears or PyOxidizer's documentation explains how to get that command.
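Since this failure only shows up deep into the build, a small preflight check can tell you beforehand whether the pieces mentioned above are in place. A sketch assuming, as in the session above, that the Rust toolchain is managed with rustup and that musl-clang is the extra tool required (based on the answer to my issue); the function name is hypothetical:

```python
import shutil
import subprocess

MUSL_TARGET = "x86_64-unknown-linux-musl"


def missing_static_build_tools(installed_targets, which=shutil.which):
    """Return what is still missing for a musl build (hypothetical helper).

    installed_targets: output of `rustup target list --installed`.
    which: command lookup function, injectable for testing.
    """
    missing = []
    if MUSL_TARGET not in installed_targets.split():
        missing.append("rustup target " + MUSL_TARGET)
    if which("musl-clang") is None:
        missing.append("musl-clang command")
    return missing


if __name__ == "__main__":
    targets = subprocess.run(
        ["rustup", "target", "list", "--installed"],
        capture_output=True, text=True, check=True,
    ).stdout
    print(missing_static_build_tools(targets) or "all static build prerequisites found")
```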

Conclusion

Although I haven't managed to create statically linked binaries with it, PyOxidizer feels like a promising tool. I think I can use it in vdist to speed up the packaging process. Since vdist uses a specific container for every type of package, the inability to create statically linked binaries doesn't seem to be a blocker. PyOxidizer seems actively developed and has a lot of contributors, so it has a good chance of helping to simplify packaging and deployment of Python applications.