I love the simplicity of Godot's shaders. It's amazing how easy it is to apply many of the visual effects that bring a game to life. I've been learning some of those effects and want to practice them in a test scene that combines several of them.

The scene will depict a typical Japanese postcard in pixel art: a cherry blossom tree swaying in the wind, dropping flowers, framed by a starry night with a full moon, Mount Fuji in the background, and a stream of water in the foreground. Besides the movement of the tree, I want to implement effects like falling petals, rain, wind, cloud movement, lightning, and water effects, covering both the water's movement and its reflections. In this first article, I'll cover how to implement the water reflection effect.

The first thing to note is that the source code for this project is in my GitHub repository. The link points to the version of the project that implements the reflection. In future articles, I will tag the commits that contain the code being discussed, and those are the commits I'll link to. As the series progresses, I might tweak earlier code, so it's important to download the commit I link to; that way, you'll see the same code discussed in the article.

I recommend downloading the project and opening it in Godot so you can see how I've set up the scene. You'll see I've layered Sprite2D nodes in the following Z-Index order, from lowest to highest:

- Starry sky

- Moon

- Mount Fuji

- Large black cloud

- Medium gray cloud

- Small cloud

- Grass

- Tree

- Water

- Grass occluder

Remember that sprites with a lower Z-Index are drawn before those with a higher one, allowing the latter to cover the former.

The grass occluder is a kind of patch I use to cover part of the water sprite so that its rectangular shape isn’t noticeable. We’ll explore why I used it later.

Water Configuration

Let's focus on the water. Its Sprite2D (Water) is just a white rectangle. I moved and resized it to occupy the entire lower third of the image. To keep the scene more organized, I placed it in its own layer (Layer 6) by setting both its "Visibility Layer" and "Light Mask" in the CanvasItem configuration.

In that same CanvasItem section, I set its Z-Index to 1000 to ensure it appears above all the other sprites in the scene, except for the occluder. This makes sense not only from an artistic point of view, as the water is in the foreground, but also because the technique we’ll explore only reflects images of objects with a Z-Index lower than the shader node's. We’ll see why in a moment.

Lastly, I assigned a ShaderMaterial based on a GDShader to the water’s "Material" property.

Let’s check out the code for that shader.

Water Reflection Shader

This shader is in the file Shaders/WaterShader.gdshader in the repository. As the scene is 2D, it's a canvas_item shader.

It exposes the following uniforms:

[Image: Shader variables we offer to the inspector]

All of these can be set from the inspector, except for screen_texture:

[Image: Shader inspector]

Remember, as I've mentioned in previous articles, a sampler2D uniform with the hint_screen_texture hint is treated as a texture that is kept up to date with the image drawn on the screen. This allows us to access the colors present on the screen and replicate them in the water reflection.

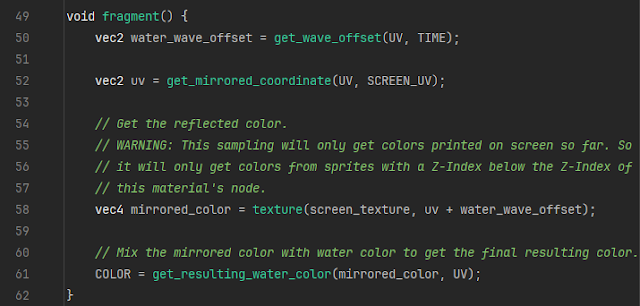

This shader doesn't distort the image; it only manipulates colors, so it only implements the fragment() function:

[Code image: fragment() method]

As seen in the previous code, the shader operates in three steps.

The first step (line 33) calculates which point of the screen image should be reflected at the water pixel the shader is currently rendering.

Once we know which point to reflect, the screen image is sampled at that point to get its color (line 38).

Finally, the reflected color is blended with the desired water color to produce the final color to display when rendering. Remember, in GDShader, the color stored in the COLOR variable will be the one displayed for the pixel being rendered.
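The shader code itself is only shown as an image above, so as a rough model of how the three steps chain together, here is a CPU-side Python sketch (not GDShader). A plain function stands in for the screen texture, and water_top (the SCREEN_UV.y where the water begins) and max_tint (a cap on the water tint) are hypothetical names; the brightness boost discussed later is left out.

```python
from typing import Callable, Tuple

Vec2 = Tuple[float, float]
Color = Tuple[float, float, float]

def mix(a: float, b: float, t: float) -> float:
    # Linear interpolation, equivalent to GDShader's mix() for floats.
    return a + (b - a) * t

def fragment(uv: Vec2, sample_screen: Callable[[Vec2], Color],
             water_color: Color, water_top: float, max_tint: float) -> Color:
    # water_top and max_tint are hypothetical names used only in this sketch.
    # Step 1: the screen coordinate reflected at this water pixel.
    mirrored = (uv[0], mix(0.0, water_top, 1.0 - uv[1]))
    # Step 2: "eyedropper" the screen color at that coordinate.
    reflected = sample_screen(mirrored)
    # Step 3: blend with the water color; the result plays the role of COLOR.
    t = min(max(uv[1], 0.0), max_tint)
    return tuple(mix(r, w, t) for r, w in zip(reflected, water_color))

# Hypothetical screen: white sky above SCREEN_UV.y = 0.3, dark mountain below.
def sample_screen(p: Vec2) -> Color:
    return (1.0, 1.0, 1.0) if p[1] < 0.3 else (0.1, 0.1, 0.1)

print(fragment((0.5, 0.1), sample_screen, (0.0, 0.2, 0.8), 0.66, 0.6))
```

Near the top edge of the water (small UV.y), the sketch samples the lower part of the screen and barely tints it. The tint grows toward the bottom edge.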

We've seen the second step (line 38) in other shaders in previous articles. It's basically like using the eyedropper tool in Photoshop or GIMP: it picks the color of a specific point on an image. Keep in mind that, when the shader executes, the screen image contains only the sprites drawn up to that point, that is, sprites with a Z-Index lower than our shader's. This makes sense: you can't sample a color from the screen that hasn't been drawn yet. If objects are missing from the reflection, it's likely because their Z-Index is higher than the shader node's.
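The draw-order rule can be made concrete with a toy Python model (not Godot code): canvas items are composited in ascending Z-Index, and the water only "sees" what has already been composited when it runs. The sprite names and Z values below are illustrative assumptions loosely based on this scene.

```python
from typing import Dict, List

def visible_in_reflection(z_index: Dict[str, int], water: str = "Water") -> List[str]:
    drawn: List[str] = []      # what the screen holds so far
    reflected: List[str] = []  # what the water can sample
    # Canvas items are drawn from lowest to highest Z-Index.
    for name in sorted(z_index, key=z_index.get):
        if name == water:
            # The water samples the screen as it is at this moment.
            reflected = list(drawn)
        drawn.append(name)
    return reflected

# Illustrative Z values, not taken from the project.
scene = {"Sky": 0, "Moon": 1, "Fuji": 2, "Tree": 8, "Water": 1000, "Occluder": 1001}
print(visible_in_reflection(scene))  # ['Sky', 'Moon', 'Fuji', 'Tree']
```

Raising any sprite's Z-Index above the water's removes it from the reflected list, which matches the missing-object symptom described above.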

Now, let’s see how to calculate the screen coordinate to reflect:

[Code image: get_mirrored_coordinate() method]

This method tests your understanding of the different coordinate types used in a 2D shader.

In a 2D shader, two types of normalized coordinates matter here:

UV coordinates: These have X and Y components, both ranging from 0 to 1. In the rectangle of the water sprite that we want to render with our shader, the origin of the coordinates is the top-left corner of the rectangle. X increases to the right, and Y increases downward. The bottom-right corner of the water rectangle corresponds to the coordinate limit (1, 1).

SCREEN_UV coordinates: These are oriented the same way as UV coordinates, but the origin is in the top-left corner of the screen, and the coordinate limit is at the bottom-right corner of the screen.

Note that when the water sprite is fully visible on the screen, its UV range maps onto a sub-rectangle of the SCREEN_UV range.
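For an unrotated, unscaled sprite, that relationship is a simple linear map. The Python sketch below uses hypothetical rect_origin and rect_size values (the sprite's top-left corner and size, expressed as fractions of the screen). Godot already provides both UV and SCREEN_UV as built-ins, so this only illustrates how the two systems relate.

```python
from typing import Tuple

Vec2 = Tuple[float, float]

def uv_to_screen_uv(uv: Vec2, rect_origin: Vec2, rect_size: Vec2) -> Vec2:
    """Map a UV inside the sprite to SCREEN_UV.

    rect_origin / rect_size are hypothetical parameters: the sprite's
    top-left corner and size, both as fractions of the screen."""
    return (rect_origin[0] + uv[0] * rect_size[0],
            rect_origin[1] + uv[1] * rect_size[1])

# Water covering the lower third of the screen, full width.
water_origin, water_size = (0.0, 2.0 / 3.0), (1.0, 1.0 / 3.0)
print(uv_to_screen_uv((0.0, 0.0), water_origin, water_size))  # top-left of the water, y ~0.667
print(uv_to_screen_uv((1.0, 1.0), water_origin, water_size))  # bottom-right of the screen
```

Because the water spans the full width here, UV.x and SCREEN_UV.x coincide; only the Y axis is offset and scaled.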

To better understand how the two types of UV coordinates work, refer to the following diagram:

[Image: UV coordinate types]

The diagram schematically represents the scene we’re working with. The red area represents the image displayed on the screen, which in our case includes the sky, clouds, moon, mountain, tree, grass, and water. The blue rectangle represents the water, specifically the rectangular sprite where we’re applying the shader.

Both coordinate systems start in the top-left corner. The SCREEN_UV system starts in the screen’s top-left corner, while the UV system starts in the top-left corner of the sprite being rendered. In both cases, the end of the coordinate system (1, 1) is in the bottom-right corner of the screen and the sprite, respectively. These are normalized coordinates, meaning we always work within the range of 0 to 1, regardless of the element’s actual size.

To explain how to calculate the reflection, I've drawn Mount Fuji as a blue triangle. The triangle in the red area is the mountain itself, while the triangle in the blue area represents its reflection.

Suppose our shader is rendering the UV coordinate (0.66, 0.66), as represented in the diagram (please note, the measurements are approximate). The shader doesn't know what color to show for the reflection, so it needs to sample the color from a point in the red area. But which point?

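Calculating the X-coordinate of the reflected point is easy: because the water rectangle spans the full width of the screen, UV.x and SCREEN_UV.x coincide, so it's the same as the reflection point's X-coordinate: 0.66.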

The trick lies in the Y-coordinate. If the reflection point is at UV.y = 0.66 (two thirds, rounded to two decimal places for clarity), it is 1 - 2/3 = 1/3 ≈ 0.33 away from the bottom edge. In our case, the image to be reflected is above and its reflection appears below, so we naturally expect the reflection to be vertically inverted. Therefore, if the reflection point is 0.33 away from the bottom edge of the rectangle, the reflected point will be 0.33 away from the top edge of the screen, so its Y-coordinate will be 0.33. This is precisely the calculation done in line 11 of the get_mirrored_coordinate() method.

So, if you picture the shader walking the rectangle from left to right and top to bottom to render its points (on the GPU, fragments actually run in parallel), it samples the screen from left to right and bottom to top (note the difference) to get the colors to reflect.
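Leaving aside the range limit discussed below, the mirroring boils down to keeping X and flipping Y. This Python sketch models the math (it is not the author's GDShader code) and shows the inverted sampling order: as UV.y walks down the water, the sampled Y walks up the screen. It assumes, as in this scene, that the water spans the full screen width, so UV.x can be reused as SCREEN_UV.x.

```python
from typing import List, Tuple

def mirror_uv(uv: Tuple[float, float]) -> Tuple[float, float]:
    # X is reused unchanged; Y is flipped so the bottom of the water
    # samples the top of the screen and vice versa.
    return (uv[0], 1.0 - uv[1])

# Walk down one column of the water rectangle, top to bottom.
rows: List[float] = [0.0, 0.25, 0.5, 0.75, 1.0]
sampled_y = [mirror_uv((0.66, y))[1] for y in rows]
print(sampled_y)  # [1.0, 0.75, 0.5, 0.25, 0.0]: the screen is read bottom to top
```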

This process has two side effects to consider.

The first is that if the reflection surface (our shader's rectangle) is shorter than the reflected surface (the screen), as in our case, the reflection will be a vertically "squashed" version of the original image. You can see what I mean in the image at the start of the article. In our case, this isn't a problem; it's even desirable, as it adds depth, mimicking how the reflection would look if the water's surface stretched away from us along our line of sight.

The second side effect is that, as we scan the screen to sample the reflected colors, at some point we will reach the lower third of the screen, where the water rectangle itself is located. This raises an interesting question: what happens when the reflection shader samples the very pixels where it's rendering the reflection? In the best case, the sampled color will be black, because those pixels haven't been painted yet (painting them is precisely our shader's job), so the reflection will show a black blotch. To avoid this, we must ensure that our sampling never goes below the screen height where the water rectangle begins.

Looking at the image at the beginning of the article, we can estimate that the water rectangle occupies the lower third of the screen. Therefore, sampling should only take place between SCREEN_UV.y = 0 and SCREEN_UV.y = 0.66. To achieve this, I use line 13 of get_mirrored_coordinate(). The mix() method performs linear interpolation: mix(a, b, t) returns a + (b - a) * t, the value that lies a fraction t of the way from a to b. For example, mix(0, 0.66, 0.5) points to the midpoint between 0 and 0.66, giving a result of 0.33.
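The range-limited mirroring can be modeled the same way: mix(0.0, water_top, 1.0 - UV.y) flips Y and then squeezes it into [0, water_top]. In this Python sketch, water_top is a hypothetical parameter standing for the screen height where the water begins (about 0.66 here), and squash_factor is a hypothetical helper; the author's shader may hard-code or expose that limit differently.

```python
from typing import Tuple

def mix(a: float, b: float, t: float) -> float:
    # Linear interpolation, equivalent to GDShader's mix() for floats.
    return a + (b - a) * t

def mirrored_coordinate(uv: Tuple[float, float], water_top: float) -> Tuple[float, float]:
    # Flip Y, then remap [0, 1] onto [0, water_top] so we never sample
    # the band of the screen that the water itself is painting.
    return (uv[0], mix(0.0, water_top, 1.0 - uv[1]))

def squash_factor(sampled_span: float, water_span: float) -> float:
    # How many screen rows end up compressed into one row of the reflection.
    return sampled_span / water_span

water_top = 0.66
print(mix(0.0, water_top, 0.5))                    # 0.33
print(mirrored_coordinate((0.5, 0.0), water_top))  # (0.5, 0.66): top edge of the water
print(mirrored_coordinate((0.5, 1.0), water_top))  # (0.5, 0.0): bottom edge of the water
```

With the water filling the lower third, squash_factor(0.66, 1 / 3) is about 2, so each row of the reflection stands for roughly two screen rows. Without the limit, the full screen height would be squeezed into that third, a factor of 3.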

By limiting the vertical range of the pixels to sample for reflection, we ensure that only the part of the screen we care about is reflected.

With all this in place, we now have the screen coordinate that we need to sample in order to get the color to reflect in the pixel our shader is rendering (line 15 of get_mirrored_coordinate()).

This coordinate will then be used in line 38 of the fragment() method to sample the screen.

Once the color from that point on the screen is obtained, we could directly assign it to the COLOR property of the pixel being rendered by our shader. However, this would create a reflection with colors that are exactly the same as the reflected object, which is not very realistic. Typically, a reflective surface will overlay a certain tint on the reflected colors, due to dirt on the surface or the surface's own color. In our case, we will assume the water is blue, so we need to blend a certain amount of blue into the reflected colors. This is handled by the get_resulting_water_color() method, which is called from line 40 of the main fragment() method.

|

| get_resulting_water_color() method |

The main effect of this method is that the water becomes more blue as you get closer to the bottom edge of the water rectangle. Conversely, the closer you are to the top edge, the more the original reflected colors should dominate. For this reason, the mix() method is used in line 29 of get_resulting_water_color(). The higher the third parameter (water_color_mix), the closer the resulting color will be to the second parameter (water_color, set in the inspector). If the third parameter is zero, mix() will return the first parameter's color (highlighted_color).

Beyond this basic behavior, there are a couple of additional considerations. In many implementations, UV.y is used directly as the third parameter of mix(). However, I chose to add an option to limit the maximum intensity of the water's color. This is done in lines 25-28 using the clamp() method, which returns currentUV.y as long as it falls within the range from 0.0 to water_color_intensity. If the value is below that range, clamp() returns 0.0; if it is above, it returns water_color_intensity. Since the result of clamp() is passed to mix(), the third parameter will never exceed the limit set in the inspector through the water_color_intensity uniform.
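Here is the same blending logic as a Python sketch, with colors as RGB tuples in [0, 1]. The parameter names echo the article's uniforms (water_color, water_color_intensity), but water_tint is a hypothetical, illustrative model, not the repository's get_resulting_water_color().

```python
from typing import Tuple

Color = Tuple[float, float, float]

def mix(a: float, b: float, t: float) -> float:
    return a + (b - a) * t

def clamp(x: float, lo: float, hi: float) -> float:
    # Same semantics as GDShader's clamp(): min(max(x, lo), hi).
    return min(max(x, lo), hi)

def water_tint(reflected: Color, water_color: Color,
               uv_y: float, water_color_intensity: float) -> Color:
    # 0 at the top edge of the water, growing toward the bottom edge,
    # but never above the configured maximum intensity.
    water_color_mix = clamp(uv_y, 0.0, water_color_intensity)
    return tuple(mix(r, w, water_color_mix) for r, w in zip(reflected, water_color))

white, blue = (1.0, 1.0, 1.0), (0.0, 0.2, 0.8)
print(water_tint(white, blue, 0.0, 0.6))  # (1.0, 1.0, 1.0): top edge, pure reflection
print(water_tint(white, blue, 1.0, 0.6))  # bottom edge, capped at 60% water color
```

Once UV.y passes water_color_intensity, the result stops changing, which is exactly the cap the clamp() call provides.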

Another consideration is that I've added a brightness boost for the reflected images. This is done between lines 20 and 22, and a uniform called mirrored_colors_intensity defines the boost in the inspector. In line 22, this value is used to increase the three color components of the reflected color, which in practice makes the color brighter. The same line also ensures that the resulting value does not exceed the valid color range, although this check may be redundant.
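The article doesn't specify exactly how the boost raises the components, so this Python sketch assumes an additive boost: mirrored_colors_intensity is added to R, G and B, and each channel is then clamped to [0, 1]. A multiplicative boost would be an equally plausible reading; boost_brightness is a hypothetical name.

```python
from typing import Tuple

Color = Tuple[float, float, float]

def boost_brightness(color: Color, mirrored_colors_intensity: float) -> Color:
    # Assumption: additive boost on each channel, then clamped so no
    # component leaves the [0, 1] range.
    return tuple(min(max(c + mirrored_colors_intensity, 0.0), 1.0) for c in color)

print(boost_brightness((0.2, 0.5, 0.95), 0.1))
```

In this example, the clamp only affects the channel that was already near 1.0 (0.95 becomes 1.0); the other channels simply gain 0.1.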

The Occluder

As mentioned earlier, this shader can only reflect sprites that were drawn before the shader itself; in other words, sprites with a lower Z-Index than the shader's. Since we want to reflect all sprites (including part of the grass), the water rectangle needs to be placed above all other elements.

If we simply place the water rectangle above everything else, the result would look like this:

[Image: Water without occluder]

It would look odd for the shoreline to be perfectly straight. That’s why I’ve added an occluder.

An occluder is a sprite fragment that acts like a patch to cover something. It usually shares the color of the element it overlaps so that they appear to be a single piece. In my case, I’ve used the following occluder:

[Image: Occluder]

The occluder I used has the same color as the grass, so when placed over the lower part of the grass, it doesn't look like a separate piece. At the same time, by covering the top edge of the water rectangle, it makes the shoreline more irregular, giving it a more natural appearance.

The result is the image that opened this article, which I will reproduce here again to show the final result in detail:

[Image: Water with occluder]

With this, I conclude this article. In a future article, I will cover how to implement some movement in the water to bring it to life.