Virtualization is all about deception.

With heavy virtualization (e.g. VMware, VirtualBox, Xen) a guest operating system is deceived into thinking it is running on dedicated hardware, while that hardware is actually shared.

With light virtualization (e.g. Docker) an application is deceived into thinking it is using a dedicated operating system kernel, while that kernel is actually shared too. In Linux everything but the kernel is considered an application, so with Docker you can make multiple Linux distributions share the same kernel (the one from the host system). Because this deception happens at a higher abstraction level than VMware's, it consumes fewer resources; that is why it is called light virtualization. It is so light that many applications are distributed as Docker packages (called images, which run as containers): the application is bundled with an operating system and its dependencies, so everything runs as a unit on another system without interfering with that system's own dependencies.

There are things light virtualization cannot do, but not many. For standard "layer 7" (application-level) development you are unlikely to hit any limitation using Docker virtualization.

Installation

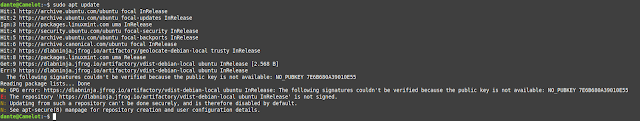

In the past you could install Docker from your distribution's package repository. You may still find a docker package there, but that no longer works. If you want to install Docker on your computer, ignore the packages available in your standard repositories and use the official Docker repositories.

Docker provides package repositories for many distributions. For instance, the Docker documentation has instructions for installing Docker on Ubuntu. Just be aware that if you are using an Ubuntu derivative, like Linux Mint, you will need to adapt those instructions so that the apt sources list names the Ubuntu release your distribution is based on.
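
A minimal sketch of that adaptation, assuming your derivative ships an /etc/os-release file with an UBUNTU_CODENAME entry (recent Linux Mint releases do; check yours). The function name ubuntu_base_codename and its optional file argument are my own, for illustration only.

```shell
# Print the Ubuntu release codename to use in the Docker apt source.
# Derivatives usually set UBUNTU_CODENAME in /etc/os-release; plain
# Ubuntu only sets VERSION_CODENAME, which is used as the fallback.
# The optional argument (path to an os-release file) is a hypothetical
# convenience so the function can be tried against a sample file.
ubuntu_base_codename() {
    (
        # Source the file in a subshell so its variables do not leak.
        . "${1:-/etc/os-release}"
        echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}"
    )
}
```

Whatever ubuntu_base_codename prints is the release name to put where the official instructions expect the Ubuntu codename.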

Once you have installed Docker on your computer, you can run a Hello World app, bundled in a container, to check that everything works:

dante@Camelot:~/$ sudo docker run hello-world

[sudo] password for dante:

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

b8dfde127a29: Pull complete

Digest: sha256:7d91b69e04a9029b99f3585aaaccae2baa80bcf318f4a5d2165a9898cd2dc0a1

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

dante@Camelot:~$

Be aware that, by default, docker commands need sudo, because only root and members of the docker group can talk to the Docker daemon. If you are not comfortable working like that, you can add yourself to the docker group:

dante@Camelot:~/$ sudo usermod -aG docker $USER

[sudo] password for dante:

dante@Camelot:~$

You may need to log out and back in for the change to take effect. After that you can run docker commands as an unprivileged user.
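
If you are not sure whether the new group is already active in your session, a quick check along these lines may help. It is only a sketch: group_listed is a helper name I made up, while id -nG is the standard command that prints the group names of the current process.

```shell
# Succeed if group $1 appears in the space-separated group list $2.
group_listed() {
    case " $2 " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Report whether the current session already carries the docker group.
if group_listed docker "$(id -nG)"; then
    echo "docker group is active in this session"
else
    echo "docker group not active yet: log out and back in"
fi
```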

Usage

You can build your own custom images, but that is a topic for another article. In this article we are going to use images prepared by others.

To find available images, head to Docker Hub and type any application or Linux distribution in its search field. The results will show you many options. If you are looking for a plain Linux distribution, prefer those tagged as "Official image".
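
The same search can be sketched from the command line with docker search and its is-official filter. The wrapper name search_official is hypothetical. Note that docker search lists repositories only, so the available tags still have to be looked up on Docker Hub.

```shell
# List only official images whose name matches the search term in $1.
search_official() {
    docker search --filter is-official=true "$1"
}

# Example call (commented out so nothing contacts the registry by accident):
# search_official ubuntu
```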

Suppose you want to try an app on Ubuntu Xenial. Select Ubuntu in the search results and take a look at the "Supported tags..." section. There you can find the names under which the different versions can be downloaded. In our case we would take note of the "xenial" or "16.04" tags.

Now that you know what to download, let's do it with docker pull:

dante@Camelot:~/$ docker pull ubuntu:xenial

xenial: Pulling from library/ubuntu

528184910841: Pull complete

8a9df81d603d: Pull complete

636d9303bf66: Pull complete

672b5bdcef61: Pull complete

Digest: sha256:6a3ac136b6ca623d6a6fa20a7622f098b2fae1ac05f0114386ef439d8ca89a4a

Status: Downloaded newer image for ubuntu:xenial

docker.io/library/ubuntu:xenial

dante@Camelot:~$

What you've done is download what is called an image. An image is a base package with a specific Linux distribution and applications. From that base package you can derive your own custom packages or run instances of it. Those instances are what we call containers.

If you want to check how many images you have locally available just run docker images:

dante@Camelot:~/$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu xenial 38b3fa4640d4 4 weeks ago 135MB

hello-world latest d1165f221234 5 months ago 13.3kB

dante@Camelot:~$

You can start instances (aka containers) from those images using docker run:

dante@Camelot:~/$ docker run --name ubuntu_container_1 ubuntu:xenial

dante@Camelot:~$

Using --name you can assign a specific name to your container, to tell it apart from other containers started from the same image.

The problem with starting containers this way is that they exit immediately, as you can see if you check the status of your containers with docker ps:

dante@Camelot:~/$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c7baad2f7e56 ubuntu:xenial "/bin/bash" 12 seconds ago Exited (0) 11 seconds ago ubuntu_container_1

3ba89f1f37c6 hello-world "/hello" 9 hours ago Exited (0) 9 hours ago focused_zhukovsky

dante@Camelot:~$

I've used the -a flag to show every container, not only the running ones. That way you can see that ubuntu_container_1 exited almost as soon as it started. That happens because Docker containers are designed to run a specific application and exit when that application ends. We did not say which application to run, so the container ran the image's default command (/bin/bash, as the COMMAND column shows), and with no terminal attached that shell exited at once.
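
To see that behaviour with an application of your choosing, you can put a command after the image name. The sketch below wraps that in a helper I have called run_once (a made-up name); --rm is the docker run flag that removes the container once the command exits.

```shell
# Run a single command in a throwaway container and show its output.
# $1 is the image; the remaining arguments are the command to run.
run_once() {
    image=$1
    shift
    docker run --rm "$image" "$@"
}

# Example call (commented out so nothing is pulled by accident):
# run_once ubuntu:xenial cat /etc/os-release
```

The container lives exactly as long as the command does, and thanks to --rm it does not linger in the docker ps -a listing.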

Before trying anything else, let's delete the previous container with docker rm, to start from scratch:

dante@Camelot:~/$ docker rm ubuntu_container_1

ubuntu_container_1

dante@Camelot:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3ba89f1f37c6 hello-world "/hello" 10 hours ago Exited (0) 10 hours ago focused_zhukovsky

dante@Camelot:~$

Now we want to keep our container alive to access its console. One way is this:

dante@Camelot:~/$ docker run -d -ti --name ubuntu_container_1 ubuntu:xenial

a14e6bcac57dd04cc777a4eac787a8465acd5b8d379591976c56de1d0acc2798

dante@Camelot:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a14e6bcac57d ubuntu:xenial "/bin/bash" 8 seconds ago Up 7 seconds ubuntu_container_1

3ba89f1f37c6 hello-world "/hello" 10 hours ago Exited (0) 10 hours ago focused_zhukovsky

dante@Camelot:~$

We've used the -d flag to run the container in the background, and -ti to allocate a terminal and keep its input open, so the interactive shell does not exit. This time the container stays up. But we are not in the container's console yet; to get there we use docker attach to connect to that container's shell:

dante@Camelot:~$ docker attach ubuntu_container_1

root@a14e6bcac57d:/#

Now you can see that the shell prompt has changed. You can leave the container console with exit, but that stops the container. To leave the container while keeping it running, press Ctrl+p followed by Ctrl+q instead.
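
An alternative worth knowing, although it is not the approach used in this article, is docker exec, which starts an additional process inside a running container. A minimal sketch, assuming the image provides /bin/bash (shell_into is a hypothetical helper name):

```shell
# Open an extra shell in the running container named in $1.
# Leaving this shell with exit ends only the extra shell;
# the container's main process keeps running.
shell_into() {
    docker exec -it "$1" /bin/bash
}

# Example call (commented out; it needs a running container):
# shell_into ubuntu_container_1
```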

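You can stop an idle container to save resources and start it again later, using docker stop and docker start. Stopping ends the container's main process but keeps its filesystem, so the changes you made inside are still there when you start it again: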

dante@Camelot:~/$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9727742a40bf ubuntu:xenial "/bin/bash" 4 minutes ago Up 4 minutes ubuntu_container_1

3ba89f1f37c6 hello-world "/hello" 10 hours ago Exited (0) 10 hours ago focused_zhukovsky

dante@Camelot:~$ docker stop ubuntu_container_1

ubuntu_container_1

dante@Camelot:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9727742a40bf ubuntu:xenial "/bin/bash" 7 minutes ago Exited (127) 6 seconds ago ubuntu_container_1

3ba89f1f37c6 hello-world "/hello" 10 hours ago Exited (0) 10 hours ago focused_zhukovsky

dante@Camelot:~$ docker start ubuntu_container_1

ubuntu_container_1

dante@Camelot:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9727742a40bf ubuntu:xenial "/bin/bash" 8 minutes ago Up 4 seconds ubuntu_container_1

3ba89f1f37c6 hello-world "/hello" 10 hours ago Exited (0) 10 hours ago focused_zhukovsky

dante@Camelot:~$

Saving your changes

Chances are that you want to export the changes made to an existing container, so you can start new containers with those changes already applied.

The best way is to use Dockerfiles, but I'll leave that for a later article. A quick and dirty way is to commit your changes to a new image with docker commit:

dante@Camelot:~/$ docker commit ubuntu_container_1 custom_ubuntu

sha256:e2005a0ec8302f8948958a90e2abc1e8957a15155c6e6bbf7300eb1709d4ae70

dante@Camelot:~$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

custom_ubuntu latest e2005a0ec830 5 seconds ago 331MB

ubuntu xenial 38b3fa4640d4 4 weeks ago 135MB

ubuntu latest 1318b700e415 4 weeks ago 72.8MB

hello-world latest d1165f221234 5 months ago 13.3kB

dante@Camelot:~$

In this example we created a new image called custom_ubuntu. Using that new image we can create new instances with the changes made so far to ubuntu_container_1.

Be aware that committing changes from a running container pauses it to avoid data corruption. Once the commit has finished, the container is resumed.
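
If you take several snapshots this way, it may help to tag each one and record what changed. Here is a sketch using the -m (message) option of docker commit; the helper name snapshot, the v1 tag and the message text are only illustrative.

```shell
# Commit container $1 to image $2 (optionally name:tag),
# storing $3 as the commit message in the image metadata.
snapshot() {
    container=$1
    image=$2
    message=$3
    docker commit -m "$message" "$container" "$image"
}

# Example call (commented out; it needs an existing container):
# snapshot ubuntu_container_1 custom_ubuntu:v1 "installed ssh server"
```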

Running services from containers

So far you have a lightweight virtual machine and access to its console, but that is not enough, because you will want to offer services from that container.

Suppose you have configured an SSH server in your container and you want to access it from your LAN. In that case the host needs to map the container's ports and expose them to the LAN.

An important gotcha here is that port mapping must be configured when the container is first created with docker run, using the -p flag in host_port:container_port order:

dante@Camelot:~/$ docker run -d -ti -p 8888:22 --name custom_ubuntu_container custom_ubuntu

5ea2604da8c696af397cd1b1f98d7426dd68182e5edd81a56a6b739849ba1e84

dante@Camelot:~$

Now you can access the container's SSH service through port 8888 on the host.
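
To double-check a mapping afterwards, docker port reports where a container port is published. A small sketch (published_port is a made-up wrapper name; HOST_ADDRESS and the root login in the ssh line are placeholders, not values from this setup):

```shell
# Show the host address and port on which container $1 publishes
# its internal port $2.
published_port() {
    docker port "$1" "$2"
}

# Example calls (commented out; they need the container from above):
# published_port custom_ubuntu_container 22
# ssh -p 8888 root@HOST_ADDRESS
```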

Sharing files with containers

Our containers won't be isolated islands. They may need files from us, or they may hand files back to us.

One way to share files is to start the container with a host folder mounted as a shared folder:

dante@Camelot:~/$ docker run -d -ti -v $(pwd)/docker_share:/root/shared ubuntu:focal

ee64e7392a77b4d0b35adadad467fe5d6a105b84290945e346f82199116b7c56

dante@Camelot:~$

Here, the host folder docker_share will be accessible from the container at the /root/shared path. Be aware that you must enter absolute paths. I use $(pwd) as a shortcut for the host's current working folder.
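
If you would rather not depend on being in the right folder, the absolute path can be worked out from whatever path you have at hand. A minimal sketch, assuming the host folder already exists (bind_spec is a hypothetical helper name):

```shell
# Print the argument for -v: the absolute form of host folder $1,
# a colon, and the container path $2. Fails if $1 does not exist.
bind_spec() {
    host_dir=$(cd "$1" && pwd) || return 1
    echo "$host_dir:$2"
}

# Example call (commented out so no container is started by accident):
# docker run -d -ti -v "$(bind_spec docker_share /root/shared)" ubuntu:focal
```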

Once your container is started, every file placed in its /root/shared folder will be visible from the host, even after the container is stopped. The other way round, that is, placing a file from the host so it can be seen in the container, is also possible, but you may need sudo, as I did here:

dante@Camelot:~/$ cp docker.png docker_share/.

cp: cannot create regular file 'docker_share/./docker.png': Permission denied

dante@Camelot:~/$ sudo cp docker.png docker_share/.

[sudo] password for dante:

dante@Camelot:~$
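
The Permission denied error above is probably a matter of ownership: when the host folder given to -v does not exist, the Docker daemon creates it, and the daemon runs as root. Assuming that is what happened here, creating the folder yourself before starting the container should keep it writable by your user. A sketch of that idea (prepare_share is a made-up helper name):

```shell
# Create shared folder $1 as the current user, if needed,
# and succeed only if we can write to it.
prepare_share() {
    mkdir -p "$1" || return 1
    [ -w "$1" ]
}

# Example call (commented out so no container is started by accident):
# prepare_share "$(pwd)/docker_share" &&
#     docker run -d -ti -v "$(pwd)/docker_share:/root/shared" ubuntu:focal
```

Files that the container itself writes into the shared folder will still belong to whichever user the container runs as, typically root.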

Another way of sharing is the built-in docker cp command:

dante@Camelot:~/$ docker cp docker.png vigorous_kowalevski:/root/shared

dante@Camelot:~$

Here, we have copied the host file docker.png to the /root/shared folder of the vigorous_kowalevski container. Note that we didn't need sudo to run docker cp.

The other way round is also possible; just swap the arguments:

dante@Camelot:~/$ docker cp vigorous_kowalevski:/etc/apt/sources.list sources.list

dante@Camelot:~$

Here we copied the container's sources.list to the host.

From here

By now you know how to deal with Docker containers. The next step is creating your own custom images with Dockerfiles and sharing them through Docker Hub. I'm going to explain those topics in a later article.