Many people dislike this methodology because they find it boring to start with tests; they prefer to dive straight into writing code and then test it manually, often arguing that they can't afford to waste time writing tests. The problem comes when the project grows in size and complexity: adding new features becomes a nightmare because existing ones break. This often goes unnoticed, since manually testing the entire application after every change quickly becomes impossible, so hidden bugs accumulate with each update. By the time one of them is discovered, it is extremely difficult to work out which update introduced it, and fixing it becomes a torment.

With TDD, this is far less likely to happen, because the tests you write for each feature are added to the test suite and can be re-run automatically whenever you add new functionality. When a new feature breaks an older one, the corresponding tests fail, alerting you to where the problem lies so you can fix it efficiently.

Games are just another kind of application, so TDD applies to them equally; that's why the major engines offer automated testing frameworks, either built in or as plugins. In this article, we will focus on one of the most popular for Godot Engine: GdUnit4.

GdUnit4 supports test automation in both GDScript and C#. Since I use C# to develop in Godot, we will focus on it throughout this article. However, if you're a GDScript user, I recommend you keep reading, because many of the concepts carry over to that language.

Plugin Installation

To install it, go to the AssetLib tab at the top of the Godot editor. There, search for "gdunit4" and click on the result.

[Figure: GdUnit4 in the AssetLib]

In the pop-up window, click "Download" and accept the default installation folder.

After doing this, the plugin will be installed in your project folder but will remain disabled. To enable it, go to Project → Project Settings... → Plugins and check the Enabled box.

[Figure: GdUnit4 plugin activation]

I recommend restarting Godot after enabling the plugin; otherwise, errors might appear.

C# Environment Configuration

The next step is to configure your C# environment to use GdUnit4 without issues.

Make sure you have the .NET 8.0 SDK installed.

Open your .csproj file and make the following changes in the <PropertyGroup> section:

- Change TargetFramework to net8.0.

- Add <LangVersion>11.0</LangVersion>.

- Add <CopyLocalLockFileAssemblies>true</CopyLocalLockFileAssemblies>.

Create an <ItemGroup> section (if it doesn’t already exist) and add the following:

```xml
<ItemGroup>
  <PackageReference Include="Microsoft.NET.Test.Sdk" Version="17.9.0" />
  <PackageReference Include="gdUnit4.api" Version="4.3.*" />
  <PackageReference Include="gdUnit4.test.adapter" Version="2.*" />
</ItemGroup>
```

As an example, here's my .csproj file:

[Figure: A csproj file adapted for GdUnit4]

If you rebuild your project (either from your IDE or MSBuild) without errors, your configuration is correct.

IDE Configuration

GdUnit4 officially supports Visual Studio, Visual Studio Code, and Rider. Configuration varies slightly between them.

I use Rider, so I'll focus on that IDE.

GdUnit4 expects an environment variable named GODOT_BIN containing the path to the Godot executable. I use Chickensoft's GodotEnv tool to manage Godot versions; it maintains a GODOT variable pointing to the editor executable, so I created a user-level GODOT_BIN variable that points to GODOT. If you don't use GodotEnv, set GODOT_BIN to the full path of your Godot executable manually.

[Figure: Environment variable configuration in Windows]
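A wrong GODOT_BIN value is easy to miss until a test run fails, so it can be worth checking up front. The following is a minimal sketch in plain .NET, with no Godot or GdUnit4 dependency. GodotBinCheck and its methods are hypothetical names, not part of GdUnit4. The sketch also expands %VAR%-style references, in case your GODOT_BIN value refers to another variable such as GODOT.

```csharp
#nullable enable
using System;
using System.IO;

// Hypothetical helper (not part of GdUnit4) that sanity-checks GODOT_BIN.
public static class GodotBinCheck
{
    public const string VariableName = "GODOT_BIN";

    // Returns null if the value looks usable, otherwise a description of the problem.
    public static string? Diagnose(string? value)
    {
        if (string.IsNullOrWhiteSpace(value))
            return $"{VariableName} is not set.";

        // Expand %VAR%-style references, e.g. a value such as %GODOT%.
        string path = Environment.ExpandEnvironmentVariables(value.Trim());
        return File.Exists(path)
            ? null
            : $"{VariableName} resolves to '{path}', but no file exists there.";
    }

    // Reads the variable as the current process sees it.
    public static string? DiagnoseCurrentProcess() =>
        Diagnose(Environment.GetEnvironmentVariable(VariableName));
}
```

You could call GodotBinCheck.DiagnoseCurrentProcess() from a scratch console app and print the result. Keep in mind that a process only sees the environment variables that existed when it was launched. That is another reason to restart your IDE after changing them.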

In Rider, make sure the Godot Support plugin is enabled. Then, in Rider's settings, check the "Enable VSTest adapters support" option and add a wildcard (*) to the "Projects with unit tests" list.

[Figure: Configuration for VSTest support]

Create a .runsettings file at your project root with the following content:

```xml
<?xml version="1.0" encoding="utf-8"?>
<RunSettings>
  <RunConfiguration>
    <MaxCpuCount>1</MaxCpuCount>
    <ResultsDirectory>./TestResults</ResultsDirectory>
    <TargetFrameworks>net7.0;net8.0</TargetFrameworks>
    <TestSessionTimeout>180000</TestSessionTimeout>
    <TreatNoTestsAsError>true</TreatNoTestsAsError>
  </RunConfiguration>
  <LoggerRunSettings>
    <Loggers>
      <Logger friendlyName="console" enabled="True">
        <Configuration>
          <Verbosity>detailed</Verbosity>
        </Configuration>
      </Logger>
    </Loggers>
  </LoggerRunSettings>
  <GdUnit4>
    <DisplayName>FullyQualifiedName</DisplayName>
  </GdUnit4>
</RunSettings>
```

Point Rider to this file in its Test Runner settings.

[Figure: Path to .runsettings configuration]

Once all of this is done, restart Rider to apply the configuration.

If you want to verify that the setup is correct, you can create a folder named Tests in your project and, inside it, a C# file (you can call it ExampleTest.cs) with the following content:

[Figure: Tests/ExampleTest.cs]

The example is designed so that Success() will be a passing test, while Failed() will intentionally fail.

You can run this test either from Rider or from the Godot editor.

In Rider, you first need to enable the test panel by navigating to View → Tool Windows → Tests. From the test panel, you can run an individual test or all tests at once.

[Figure: Test Execution Tab in Rider]

To run them from Godot, open the GdUnit tab.

[Figure: Test Execution Tab in Godot]

If the Godot tab doesn’t display the tests, it might not be looking in the correct folder. To verify this, click the tools button in the top-left corner of the tab and ensure that the "Test Lookup Folder" parameter points to the folder where your tests are located.

[Figure: Path to the Test Folder]

If the tests still don’t appear, try restarting. Both Godot and Rider sometimes fail to detect changes or newly added tests, and restarting whichever one is misbehaving usually fixes it. I expect this to improve as the tools mature. In any case, in my experience, tests run from Rider have worked far more reliably than those run directly from the Godot editor.

Testing a Game

All this setup is tedious, but the good news is that you only need to do it once. From then on, it’s just a matter of writing tests and running them.

There are many things to test in a game. Unit tests focus on testing specific methods and functions, but I’m going to explain something broader: integration tests. These tests evaluate the game at the same level a player would, interacting with objects and verifying whether the game reacts as expected. For this purpose, it’s common to create special test levels, where functionalities are concentrated to allow for quick and efficient testing.

To make this clear, let’s use a simple example. Imagine a game with an element (an intelligent agent) that must move towards the position of a specific marker. To verify that the agent behaves correctly, we would place it at one end of the level and the marker at the other, wait a few seconds, and then check the agent's position. If it has moved close enough to the marker, we can conclude that it’s working correctly.
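The scenario above is essentially arrange, act, assert. The code below is an engine-free sketch of the "act" part: it steps a simulated agent toward the target frame by frame. It assumes, hypothetically, that the agent moves in a straight line at a constant maximum speed. The real agent in the example project is a Godot node with its own steering code, which is not reproduced here, and SeekScenario is a made-up name.

```csharp
using System;
using System.Numerics;

// Engine-free model of the scenario. ASSUMPTION: the agent heads straight for
// the target at a constant maximum speed (not the real Godot agent's logic).
public static class SeekScenario
{
    // Arrange: the caller supplies the start and target positions.
    // Act: advance the agent for `seconds` of simulated time in fixed frames.
    public static Vector2 Simulate(Vector2 agentStart, Vector2 target, float maxSpeed,
                                   float seconds, float frameTime = 1f / 60f)
    {
        Vector2 position = agentStart;
        int frames = (int)MathF.Ceiling(seconds / frameTime);
        for (int i = 0; i < frames; i++)
        {
            Vector2 toTarget = target - position;
            float distance = toTarget.Length();
            float stepLength = maxSpeed * frameTime;
            if (distance <= stepLength)
            {
                // Close enough to arrive this frame: stop on the target instead of overshooting.
                position = target;
                break;
            }
            // Move one frame's worth of distance along the normalized direction.
            position += toTarget / distance * stepLength;
        }
        return position;
    }
}
```

With the start and target at opposite ends of the level, the assert step then only has to compare the returned position with the target's.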

You can download the Godot code for this example from a specific commit in one of my repositories. If you open it in the Godot editor, load the scene Tests/TestLevels/SimpleBehaviorTestLevel.tscn, set it as the main scene (Project → Project Settings... → General → Application → Run → Main Scene), and run the game, you’ll see that it behaves just as described: the red crosshair moves to wherever you click, and the green ball moves towards the crosshair’s position. So we can verify manually that the game behaves as expected. Now let’s automate that verification.

[Figure: The game we’re testing]

The first step is to set up the level with the elements needed for testing. These auxiliary elements would only get in the way in the levels players actually play, which is why dedicated test levels are usually created (hence this one lives in the Tests/TestLevels folder).

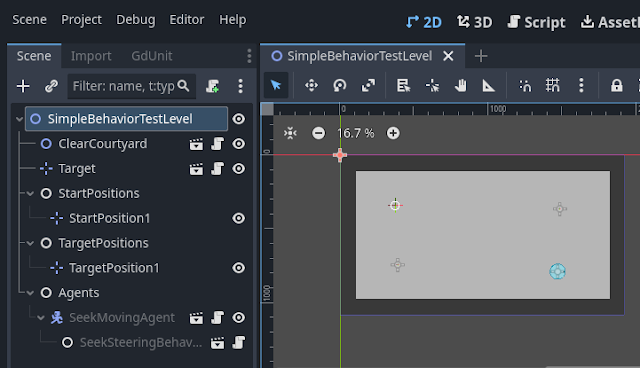

Our test level has the following structure:

[Figure: Test Level Structure]

The elements are as follows:

- ClearCourtyard: This is the box where our agent moves. It’s a simple TileMap where I’ve drawn dark gray walls (impassable) and a light gray floor (where movement happens).

- Target: This is a scene whose main node is a Marker2D, with a Sprite2D underneath containing the crosshair image. The main node has a script that listens to Input events and reacts to left mouse button clicks, placing itself at the clicked screen position.

- SeekMovingAgent: This is the agent whose behavior we want to verify.

- StartPosition1: This is the position where we want the agent to start at the beginning of the test.

- TargetPosition1: This is the position where we want the Target to start at the beginning of the test.

The code for our test will consist of one or more C# files located in the Tests folder. In this case, we’ll only have one file, but we could have several if we were testing different functionalities. Each file can contain multiple tests. A test is essentially a method marked with the [TestCase] attribute. The class containing that method must be marked with the [TestSuite] attribute so that the test runner includes it when executing tests.
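Both attributes matter, and a toy version of attribute-based discovery written with plain C# reflection shows why. DemoSuite and DemoCase are made-up stand-ins, not GdUnit4's [TestSuite] and [TestCase], and GdUnit4's actual discovery mechanism is not reproduced here. The sketch only shows the rule described above: a method is picked up only if it carries the test attribute and its class carries the suite attribute.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Toy stand-ins for a suite and a test attribute (NOT the GdUnit4 types).
[AttributeUsage(AttributeTargets.Class)]
public sealed class DemoSuiteAttribute : Attribute { }

[AttributeUsage(AttributeTargets.Method)]
public sealed class DemoCaseAttribute : Attribute { }

[DemoSuite]
public class MarkedSuite
{
    [DemoCase] public void First() { }
    [DemoCase] public void Second() { }
    public void Helper() { }              // not a test: no [DemoCase]
}

public class UnmarkedClass
{
    [DemoCase] public void Ignored() { }  // skipped: the class lacks [DemoSuite]
}

public static class ToyDiscovery
{
    // Returns "Class.Method" for every [DemoCase] method inside a [DemoSuite] class.
    public static List<string> FindTests(Assembly assembly) =>
        assembly.GetTypes()
            .Where(t => t.GetCustomAttribute<DemoSuiteAttribute>() != null)
            .SelectMany(t => t.GetMethods(BindingFlags.Public | BindingFlags.Instance)
                .Where(m => m.GetCustomAttribute<DemoCaseAttribute>() != null)
                .Select(m => $"{t.Name}.{m.Name}"))
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToList();
}
```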

Our example test is located in the file Tests/SimpleBehaviorTests.cs.

The first half of the file focuses on preparations.

[Figure: Test Preparation]

As you can see in line 9, I’ve stored the path to the level we want to test in a constant.

We load this level in line 17. This line belongs to the method LoadScene(), which we can name however we like as long as we mark it with the [BeforeTest] attribute. This signals that LoadScene() should run just before each test. This way, we ensure that the level starts fresh each time, and that previous tests don’t interfere with subsequent ones.

There are other useful attributes:

- [Before]: Runs the decorated method once before all tests in the class execute. Useful for initializing resources shared across tests that don’t need resetting.

- [AfterTest] and [After]: These are the counterparts of [BeforeTest] and [Before], used for cleaning up after tests.
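A toy runner in plain C# can sketch the order in which these hooks run and why recreating the level before each test matters. ToySuiteRunner and FakeLevel are hypothetical. The runner only mimics the order described above ([Before] once, [BeforeTest] and [AfterTest] around each test, [After] once) and is not how GdUnit4 is implemented.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a loaded level: it only tracks the agent's x position.
public sealed class FakeLevel
{
    public int AgentX { get; set; }
}

// Toy runner mimicking the hook order described in the text (not GdUnit4 itself).
public sealed class ToySuiteRunner
{
    private FakeLevel? _level;
    public List<string> Log { get; } = new();

    private void Before() => Log.Add("Before");          // once, before all tests
    private void BeforeTest()                             // before every test
    {
        _level = new FakeLevel();                         // fresh level, like LoadScene()
        Log.Add("BeforeTest");
    }
    private void AfterTest()                              // after every test
    {
        _level = null;                                    // drop the used level
        Log.Add("AfterTest");
    }
    private void After() => Log.Add("After");            // once, after all tests

    public void Run(IEnumerable<(string Name, Action<FakeLevel> Body)> tests)
    {
        Before();
        foreach (var (name, body) in tests)
        {
            BeforeTest();
            body(_level!);
            Log.Add(name);
            AfterTest();
        }
        After();
    }
}
```

Suppose FakeLevel were created only once, in Before(). Then a test that moves the agent would leave it displaced for every test that runs after it, which is exactly the interference that per-test loading avoids.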

Once we have a reference to the freshly loaded level, we can start the test:

[Figure: The code for our test]

Between lines 24 and 30, we get references to the test elements using the FindChild() method, passing the name of the node we want to retrieve in each call. Since FindChild() returns a plain Node, we need to cast the result to the node's actual type.

Once we have the references to the elements, we place them at their starting positions. In line 33, we place _target at the position marked by _targetPosition. In line 34, we place _movingAgent at the position marked by _agentStartPosition.

We could have hard-coded these coordinates in the test, but if they need adjusting later, it’s much easier to tweak the marker nodes visually in the editor than to edit the code.

In line 37, we activate the agent so it starts moving. In general, I recommend keeping agents deactivated by default and activating only the ones each test needs, so that they don't interfere with one another.

In line 39, we wait for 2.5 seconds, which we estimate is sufficient for the agent to reach the target.
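A wait like this can be estimated from the level layout. Divide the straight-line distance by the agent's maximum speed, then pad the result with a safety margin. The sketch below assumes both values are known. WaitEstimate and its 1.5 default safety factor are illustrative choices, not values taken from the example project.

```csharp
using System;

// Hypothetical helper for picking how long a movement test should wait.
public static class WaitEstimate
{
    // Straight-line travel time at maxSpeed, multiplied by a safety factor to
    // absorb acceleration, detours around obstacles, and frame timing.
    public static float Seconds(float distance, float maxSpeed, float safetyFactor = 1.5f)
    {
        if (distance < 0f) throw new ArgumentOutOfRangeException(nameof(distance));
        if (maxSpeed <= 0f) throw new ArgumentOutOfRangeException(nameof(maxSpeed));
        if (safetyFactor < 1f) throw new ArgumentOutOfRangeException(nameof(safetyFactor));
        return distance / maxSpeed * safetyFactor;
    }

    // Same estimate rounded up to whole milliseconds, for APIs that take integer durations.
    public static int Milliseconds(float distance, float maxSpeed, float safetyFactor = 1.5f) =>
        (int)MathF.Ceiling(Seconds(distance, maxSpeed, safetyFactor) * 1000f);
}
```

A generous margin makes the test less flaky, but every extra second is paid on each run of the suite, so it is worth keeping the margin modest.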

Finally, the moment of truth arrives. In line 41, we measure the distance between the agent and the target. If this distance is smaller than a tiny threshold (as floating-point math isn’t perfectly precise), we assume the agent successfully reached its target (line 43).
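The threshold comparison can be written as a small helper. This plain C# sketch uses System.Numerics.Vector2 in place of Godot's Vector2 (whose equivalents are DistanceTo() and DistanceSquaredTo()). The ProximityCheck class is hypothetical. The threshold value itself is up to you and should reflect how precisely your agent is expected to stop.

```csharp
using System;
using System.Numerics;

// Hypothetical helper for the final check: System.Numerics.Vector2 stands in
// for Godot's Vector2 so the sketch runs without the engine.
public static class ProximityCheck
{
    // True when the agent ended strictly closer than `threshold` to the target.
    // Exact equality is avoided on purpose: positions accumulated from many small
    // floating-point steps rarely match the target bit for bit.
    public static bool IsWithin(Vector2 agent, Vector2 target, float threshold)
    {
        if (threshold <= 0f)
            throw new ArgumentOutOfRangeException(nameof(threshold), "Threshold must be positive.");

        // Comparing squared values skips the square root and gives the same
        // result, because both sides are non-negative.
        return Vector2.DistanceSquared(agent, target) < threshold * threshold;
    }
}
```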

Conclusion

Many other tests can be built along the same lines as this one. The usual approach is to group related tests into a single class (a .cs file) to keep them organized.

This test was highly visual, as it evaluated movement through position changes. However, other tests might focus on internal values of the agent, verifying specific properties using assertions.

Lastly, I highly recommend checking out the official GdUnit4 documentation. Although it originally focused on GDScript, it now covers C# as well, with specific installation guidelines for C# and example code in tabs for both languages.