You usually start by doing everything manually, but at some point you realize it's better to keep everything in scripts. Homemade build scripts may be enough for many personal projects, but sometimes your functional tests last longer than you want to stay in front of your computer. Delegating functional tests is the solution to that problem, and that is where Travis-CI comes in.

Travis-CI provides an automation environment to run your functional tests and, afterwards, any script you want depending on whether your tests succeed or fail. You may know Jenkins; Travis-CI is similar but simpler and easier. In an enterprise production environment you will probably use Jenkins for continuous integration, but for a personal project Travis-CI may be more than enough. Nowadays Travis-CI is free for personal open source projects.

When you register with Travis-CI you are asked to connect a GitHub account, so you cannot use Travis without one. From then on you will always be asked to log in to GitHub to enter your Travis account.

Once inside your Travis account you can click the "+" icon to link any of your GitHub repositories to Travis. After you switch on every GitHub repository you want Travis to build, you must include in each repository's root a file called .travis.yml (notice the dot at the very beginning). Its content tells Travis what to do when it detects a git push to the repository.

To have a concrete example, let's examine the Travis configuration for vdist. You have many other examples in the Travis documentation, but I think the vdist build process covers enough Travis features to give you a good taste of what you can achieve with it. Namely, we are going to study a stable snapshot of vdist's .travis.yml file. So, please, keep a window open with that .travis.yml code while you read this article. I'll give a high-level overview of the workflow first, and afterwards we'll explain every particular step of that workflow in depth.

The workflow that .travis.yml describes is this:

Figure: vdist build workflow

When I push to the staging branch at GitHub, Travis is notified by GitHub through a webhook. It then downloads the latest version of the source code and looks for a .travis.yml file in its root. With that file Travis knows which workflow to follow.

In vdist's case, Travis looks for my functional tests and runs them using a Python 3.6 interpreter and the one marked as nightly in the Python repositories. That way I can check that my code runs fine on my target Python version (3.6) and find in advance any trouble I may have with the next planned release of Python. If any Python 3.6 test fails, the build process stops and I'm emailed a warning. If any test fails under the nightly Python version, I'm emailed a warning but the build process continues, because I only support stable Python releases. That way I know whether I have to work out any future problem with the next Python release, but I let the build process continue as long as tests succeed with the current stable Python version.

If tests succeed, the staging branch is merged into the master branch at GitHub. Then GitHub activates two webhooks to the following sites:

- Docker Hub: To rebuild some optimized Docker images vdist uses when it packages an application. I'm writing an article about Docker Hub as I already did with Read the Docs.

- Read the Docs: To rebuild the documentation site using the Markdown documents in the docs/ folder of the vdist repository.

While GitHub merges branches and activates webhooks, Travis starts the packaging process and deploys the generated packages to some public repositories. Packages are generated in three main flavours: wheel package, deb package and rpm package. Wheel packages are deployed to PyPI, while deb and rpm ones are deployed to my Bintray repository and to the vdist GitHub releases page.

Travis modes

When Travis is activated by a push to your repository, it begins what is called a build.

A build generates one or more jobs. Every job clones your repository into a virtual environment and then carries out a series of phases. A job finishes when it accomplishes all of its phases. A build finishes when all of its jobs are finished.

Travis default mode involves a lifecycle with these main phases for its jobs:

- before_install

- install

- before_script

- script

- after_success or after_failure

- before_deploy

- deploy

- after_deploy

- after_script
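To make the order of those phases concrete, here is a minimal sketch of a .travis.yml that only prints a message in each one. It is a hypothetical file, not vdist's configuration, and the echo commands are placeholders.

```yaml
# Hypothetical .travis.yml: one placeholder command per lifecycle phase.
language: python

before_install:
  - echo "prepare the system, for example with apt packages"
install:
  - echo "install project dependencies"
before_script:
  - echo "set up anything the tests need"
script:
  - echo "run the tests (a non-zero exit code fails the job)"
after_success:
  - echo "runs only when the script phase succeeded"
after_failure:
  - echo "runs only when the script phase failed"
before_deploy:
  - echo "prepare the artifacts to upload"
# A deploy section would go here. It takes provider settings, not a command list.
after_deploy:
  - echo "runs after a deployment"
after_script:
  - echo "runs last"
```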

Initial configuration

In the opening lines of the file you set up Travis's general configuration.

You first set your project's native language using the "language" tag so Travis can provide a virtual environment with the proper dependencies installed.

Travis provides two kinds of virtual environments. The default one is a Docker Linux container, which is lightweight and so very quick to launch. The second is a full-weight virtualized Linux image, which takes longer to launch but sometimes allows things that containers don't. For instance, vdist uses Docker for its build process (that's why I use the "services" tag with docker), so I have to use Travis's full-weight virtual environment. Otherwise, if you try running Docker inside a Docker container, you'll find it does not work. So, to launch a full-weight virtual environment you should set a "sudo: enabled" tag.

By default Travis uses a rather old Linux version (Ubuntu Trusty). At the time I wrote this article there were promises that a newer version would be available soon. They say keeping the environment at the latest Ubuntu release takes too many resources, so they update it less frequently. When the update arrives you can ask to use it by changing "dist: trusty" to whatever version they make available.

Sometimes you will find that an old Linux environment does not provide the dependencies you actually need. To help with that, the Travis team tries to maintain a specially updated Trusty image. To use that updated version you should use the "group: travis_latest" tag.
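Put together, the general setup described in this section could look like the sketch below. It is an illustration built from the tags quoted above, not a copy of vdist's file. One assumption to flag: the sketch spells the full virtual machine option as "sudo: required", which is the form I remember from the Travis documentation of that period.

```yaml
# Hypothetical general setup for a Python project that needs Docker.
language: python      # selects an image with Python tooling preinstalled
sudo: required        # assumed spelling; asks for the full virtual machine
dist: trusty          # Ubuntu release of the build image
group: travis_latest  # opt in to the more frequently updated image
services:
  - docker            # make the Docker daemon available to the build
```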

Test matrix

From lines 14 to 33 you have:

There, under the "python:" tag, you set the versions of the Python interpreter you want to run your tests under.

You might need to run tests depending not only on Python interpreter versions but also on multiple environment variables. Setting them under "matrix:" is the way to go.

You can set some conditions to be tested and be warned if they fail without failing the entire build. Those conditions use the "allow_failures" tag. In this case I let my build continue if tests with an under-development (nightly) version of Python fail. That way I'm warned that my application may fail with a future release of Python, but I let it be packaged as long as tests with the official release of Python pass.

You can set global environment variables to be used by your build scripts using the "global:" tag.
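As an illustration of the tags just described, a test matrix section might look like this minimal sketch. The variable names and values are hypothetical and are not taken from vdist's file.

```yaml
# Hypothetical test matrix: two interpreters, one of them allowed to fail.
python:
  - "3.6"
  - "nightly"

matrix:
  allow_failures:
    - python: "nightly"   # a failure here is reported but does not fail the build

env:
  global:
    - PACKAGE_NAME=example_package   # hypothetical, visible to every job
  matrix:
    - TEST_SUITE=unit                # hypothetical, each entry multiplies the jobs
    - TEST_SUITE=integration
```

With two interpreters and two entries under the env matrix, this sketch would produce four jobs per build.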

If any of those variables holds a value that would be dangerous to expose in a public repository, you can encrypt it using the travis tool. First make sure you have the travis tool installed. As it is a Ruby client, you first have to install that interpreter:

dante@Camelot:~/$ sudo apt-get install python-software-properties

dante@Camelot:~/$ sudo apt-add-repository ppa:brightbox/ruby-ng

dante@Camelot:~/$ sudo apt-get update

dante@Camelot:~/$ sudo apt-get install ruby2.1 ruby-switch

dante@Camelot:~/$ sudo ruby-switch --set ruby2.1

Then you can use the Ruby package manager to install the travis tool.

dante@Camelot:~/$ sudo gem install travis

With the travis tool installed you can now ask it to encrypt whatever value you want.

dante@Camelot:~/$ sudo travis encrypt MY_PASSWORD=my_super_secret_password

It will output a "secure:" tag followed by an apparently random string. You can now copy the "secure:" tag and the encrypted string to your .travis.yml. What we've done here is use Travis's public key to encrypt our string. When Travis reads our .travis.yml file it will use its private key to decrypt every "secure:" tag it finds.
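Once pasted, the encrypted value sits next to the plain global variables. A minimal sketch, with a placeholder where the real encrypted string would go and a hypothetical plain variable for contrast:

```yaml
env:
  global:
    - PLAIN_SETTING=visible_value   # hypothetical, readable by anyone
    # Placeholder: paste here the string printed by "travis encrypt".
    - secure: "<encrypted string printed by travis encrypt>"
```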

Branch filtering

This code comes from lines 36 to 41 of our .travis.yml example. By default Travis activates on every push to every branch in your repository, but usually you want to restrict activation to specific branches only. In my case I activate builds only on pushes to the "staging" branch.

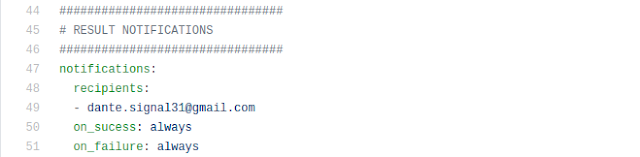
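In text form, a branch filter that only builds pushes to a "staging" branch can be sketched like this (an illustration of the tag, not a transcription of the example file):

```yaml
# Build only when the push goes to the staging branch.
branches:
  only:
    - staging
```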

Notifications

As you can see at lines 44 to 51, you can set up which email recipients should be notified on success, on failure, or on both:
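A notification section of that kind might look like the following sketch. The address is a placeholder, and the choice of "always" for both outcomes is just one possible setting.

```yaml
notifications:
  email:
    recipients:
      - someone@example.com   # placeholder address
    on_success: always        # other accepted values: never, change
    on_failure: always
```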

Testing

From line 54 to 67 we get to testing, the real core of our job:

As you can see, actually it comprises three phases: "before_install", "install" and "script".

You can use those phases in whatever way is most comfortable for you. I've used "before_install" to install all the system packages my tests need, and "install" to install all the Python dependencies.

You launch your tests at the "script" phase. In my case I use pytest to run my tests. Be aware that Travis waits 10 minutes to receive any screen output from your tests. If none is received, Travis assumes the test got stuck and cancels it. This behavior can be a problem if it is normal for your tests to stay silent for longer than 10 minutes. In that case you should launch your tests using the "travis_wait N" command, where N is the number of minutes Travis should wait for output before giving up. In my case my tests are really long, so I ask Travis to wait 30 minutes.
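The three phases described above could be sketched as follows. The package name and the requirements file are hypothetical stand-ins; only the use of pytest and the 30 minute wait come from the text.

```yaml
before_install:
  # System packages the tests need (hypothetical package name).
  - sudo apt-get update
  - sudo apt-get install -y some-system-package
install:
  # Python dependencies (assumes the project keeps them in requirements.txt).
  - pip install -r requirements.txt
script:
  # Let the tests stay silent for up to 30 minutes before Travis cancels them.
  - travis_wait 30 pytest
```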

Stages

In our example file, stage definitions run from line 71 to 130.

Actually, the configuration so far is not so different from what it would be if we were using Travis's default mode. The big differences begin when we find a "jobs:" tag, because it marks the beginning of the stage definitions. From there on, every "stage" tag marks the start of an individual stage definition.

Stages are executed sequentially, in the same order they have in the .travis.yml configuration. Be aware that if any of those stages fails, the build ends at that point.

You may ask yourself why testing is not defined as a stage. Actually it could be, and you should do so if you wanted to alter the usual order and not execute tests at the very beginning. If tests are not explicitly defined as a stage, they are executed at the beginning, as the first stage.
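The overall shape of a stages definition can be sketched like this. The stage names and echo commands are hypothetical placeholders, used only to show how stages are listed under "jobs:".

```yaml
# Tests defined at the top level of the file run first, as an implicit first stage.
jobs:
  include:
    - stage: first hypothetical stage
      script: echo "runs once the tests have succeeded"
    - stage: second hypothetical stage
      script: echo "runs only if the previous stage succeeded"
```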

Let's examine vdist's stages.

Stage to merge staging branch into master one

From line 77 to 81:

If tests have been successful, we are pretty sure the code is clean, so we merge it into master. Merging into master has the side effect of triggering the Read the Docs and Docker Hub webhooks.

To keep my main .travis.yml clean, I've moved all the code needed to do the merge to an external script. That script automatically runs the same console commands we would run to perform the merge manually.
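A stage that delegates the merge to an external script might look like this sketch. The stage name and the script path are hypothetical, so check the real .travis.yml for the actual ones.

```yaml
jobs:
  include:
    - stage: merge staging into master   # hypothetical stage name
      python: "3.6"
      script:
        # Hypothetical path: a script that runs the usual git merge and push commands.
        - ci_scripts/merge_with_master.sh
```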

Stage to build and deploy to an artifact service

So far you've checked that your code is OK; now it's time to bundle it in a package and upload it to wherever your users are going to download it.

There are many ways to build and package your code (as many as programming languages) and many services to host your packages. For the usual package hosting services, Travis has predefined deployment jobs that automate much of the work. You have an example of that in lines 83-94:

There you can see a predefined deployment job for Python packages consisting of two steps: packaging the code into a wheel package and uploading it to the PyPI service.

The arguments to provide to a predefined deployment job vary for each of them. Read the Travis instructions for each one to learn how to configure the one you need.
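For the PyPI case, a predefined deployment job could be sketched as below. The stage name, user name and branch condition are placeholders or assumptions, and the password would be a value produced with "travis encrypt".

```yaml
jobs:
  include:
    - stage: deploy wheel to PyPI       # hypothetical stage name
      python: "3.6"
      script: skip                      # nothing to test in this stage
      deploy:
        provider: pypi
        user: my_pypi_user              # placeholder
        password:
          secure: "<encrypted password>"
        distributions: bdist_wheel      # build a wheel before uploading
        on:
          branch: staging               # assumption: deploy from the branch being built
```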

But there are times when the package hosting service is not in Travis's list of supported services. When that happens things get more manual, as you can always use a script tag to run custom shell commands. You can see it in lines 95-102:

In those lines you can see how I call some scripts defined in folders of my source code. Those scripts use my own tools to package my code as rpm and deb packages. Although in the following lines I've used Travis's predefined deployment jobs to upload the generated packages to hosting services, I could have scripted that too. Package hosting services generally provide a way to upload packages using console commands, so you always have that option if Travis does not provide a predefined job for it.
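The combination of custom packaging scripts and a predefined upload job could be sketched as follows. The script paths, the output folder and the deploy condition are hypothetical. Only the GitHub releases target comes from the text, and a Bintray upload would need its own deploy entry.

```yaml
jobs:
  include:
    - stage: build and deploy system packages   # hypothetical stage name
      python: "3.6"
      script:
        # Hypothetical scripts kept in the repository that build the packages.
        - packaging/build_deb.sh
        - packaging/build_rpm.sh
      deploy:
        provider: releases                      # GitHub releases page
        api_key:
          secure: "<encrypted GitHub token>"
        file_glob: true                         # treat "file" as a glob pattern
        file: dist/*                            # hypothetical output folder
        skip_cleanup: true                      # keep the files built by script
        on:
          branch: staging                       # assumption about the deploy condition
```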

Debugging

Often your Travis setup won't work on the first run, so you'll need to debug it.

To debug your Travis build, your first step is reading the build output log. If the log is so big that Travis does not show it entirely in the browser, you can download the raw log and open it in your text editor. Search the log for any unexpected error. Then try to run your script in a virtual machine with the same Linux version Travis uses. If the error found in the Travis build log reproduces in your local virtual machine, you have all you need to find out what does not work.

Things get complicated when the error does not happen in your local virtual machine. Then the error lies in some particularity of the Travis environment that cannot be replicated in your virtual machine, so you must get into the Travis environment while it builds and debug there.

Travis enables debugging by default for private repositories. If you have one, you'll find a debug button just below the restart build one:

If you push that button the build will start but, after the very first steps, it will pause and open a listening SSH port for you to connect to. Here is an example of the output you see after using that button:

Debug build initiated by BanzaiMan
Setting up debug tools.
Preparing debug sessions.
Use the following SSH command to access the interactive debugging environment:
ssh DwBhYvwgoBQ2dr7iQ5ZH34wGt@ny2.tmate.io
This build is running in quiet mode. No session output will be displayed.
This debug build will stay alive for 30 minutes.

In the last example you would connect with ssh to DwBhYvwgoBQ2dr7iQ5ZH34wGt@ny2.tmate.io to get access to a console in the Travis build virtual machine. Once inside your build virtual machine you can run every build step by calling the following commands:

travis_run_before_install

travis_run_install

travis_run_before_script

travis_run_script

travis_run_after_success

travis_run_after_failure

travis_run_after_script

Those commands will run the respective build steps. When the expected error finally appears, you can inspect the environment to find out what is wrong.

The problem is that the debug button is not available for public repositories. Don't panic: you can still use that feature, but you'll need some extra steps. To enable the debug feature you should ask Travis support for it by emailing support@travis.ci.com. They will grant it to you in just a few hours.

Once you receive confirmation from Travis support that debug is enabled, I'm afraid you won't see the debug button yet. The point is that, although the debug feature is enabled, for public repositories you can trigger it only through an API call. You can launch that API call from the console with this command:

dante@Camelot:~/project-directory$ curl -s -X POST \
  -H "Content-Type: application/json" \
  -H "Accept: application/json" \
  -H "Travis-API-Version: 3" \
  -H "Authorization: token ********************" \
  -d "{\"quiet\": true}" \
  https://api.travis-ci.org/job/{id}/debug

To get your token you should run these commands first:

dante@Camelot:~/project-directory$ travis login
We need your GitHub login to identify you.
This information will not be sent to Travis CI, only to api.github.com.
The password will not be displayed.
Try running with --github-token or --auto if you don't want to enter your password anyway.
Username: dante-signal31
Password for dante-signal31: *****************************************
Successfully logged in as dante-signal31!
dante@Camelot:~/project-directory$ travis token
Your access token is **********************

To get a valid number for {id}, open the latest log of the job you want to repeat and expand the "Build system information" section. There you can find the number you need at the "Job id:" line.

Right after launching the API call you can ssh to your Travis build virtual machine and start debugging.

To end the debugging session you can either close all your ssh windows or cancel the build from the web user interface.

But you should be aware of a potential danger. Why is this debug feature not available by default for public repositories? Because the listening SSH server has no authentication, so anyone who knows where to connect will get console access to the same virtual machine. How would an attacker know where to connect? By watching your build logs: if your repository is not private, your Travis logs are public in real time by default, remember? If an attacker is watching your logs at the very moment you start a debug session, she will see the same SSH connection string as you and will be able to connect to the virtual machine. Inside the Travis virtual machine the attacker can echo your environment variables and any secrets you have in them. The good news is that any new SSH connection is attached to the same console session, so if an intruder sneaks in you will see her commands echoing in your session, giving you a chance to cancel the debug session before she gets anything critical.