As with other articles, you can find the code for this one in my GitHub repository. I recommend downloading it and opening it locally in your Godot editor to follow along with the explanations, but make sure to download the code from the specific commit I’ve linked to. I’m constantly making changes to the project in the repository, so if you download code from a later commit than the one linked, what you see might differ from what I show here.

I don’t want to repeat explanations, so if you’re not yet familiar with UV coordinates in a Godot shader, I recommend reading the article I linked to at the beginning.

So we are going to build on the shader we applied to the rectangle of the water sprite. The code for that shader is in the file Shaders/WaterShader.gdshader.

To parameterize the effect and configure it from the inspector, I have added the following uniform variables:

[Figure: Shader parameters configurable from the inspector]
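As a rough idea of what such declarations might look like in text form, here is a minimal sketch assuming Godot 4 shader syntax. The uniform names and the 0.11 threshold come from this article; the other default values, the range hints, and the repeat_enable hint are my own assumptions, not necessarily what the repository uses.

```glsl
shader_type canvas_item;

// Amplitude of the distortion, in UV units (default value is an assumption).
uniform float wave_strength : hint_range(0.0, 0.1) = 0.02;

// How fast the noise image is traversed over time (default value is an assumption).
uniform float wave_speed = 0.05;

// Fraction of the sprite height over which the distortion ramps up.
uniform float wave_threshold : hint_range(0.0, 1.0) = 0.11;

// Noise image that drives the distortion. repeat_enable (an assumption) lets
// the sampling wrap around when the coordinates leave the 0..1 range.
uniform sampler2D wave_noise : repeat_enable;
```

Declared this way, each uniform shows up under Shader Parameters in the inspector of the material that uses the shader.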

The wave_strength and wave_speed parameters are intuitive: the first defines the amplitude of the distortion of the reflected image, while the second defines the speed of the distortion movement.

However, the wave_threshold parameter requires some explanation. If you place a mirror at a person’s feet, you expect the reflection of those feet to touch the feet themselves. A reflection is like a shadow: it typically begins at the feet. The problem is that the algorithm we will explore here can distort the image right from the top edge of the reflection, so that the reflection no longer touches the lower edge of the object being reflected. This can detract from the realism of the effect, so I added the wave_threshold parameter to define at what fraction of the sprite the distortion reaches its full strength. In my example, it’s set to 0.11, which means that the distortion reaches full strength at a distance from the top edge of the water sprite equal to 11% of its height (i.e., UV.y = 0.11).

Finally, there is the wave_noise parameter. This is an image. For a parameter like this, we could use any kind of image, but for our distortion algorithm, we’re interested in a very specific one—a noise image. A noise image contains random black and white patches. Those who are old enough might remember the image seen on analog TVs when the antenna was broken or had poor reception; in this case, it would be a very similar image. We could search for a noise image online, but fortunately, they are so common that Godot allows us to generate them using a NoiseTexture2D resource.

[Figure: Configuring the noise image]

A configuration like the one shown in the figure will suffice for our case. In fact, I only changed two parameters from the default configuration. I disabled Mipmaps generation because this image won’t be viewed from a distance, and I enabled the Seamless option because, as we will see later, we will traverse the image continuously, and we don’t want noticeable jumps when we reach the edge. I also set FastNoiseLite as the noise generator, though this detail is not crucial.

The usefulness of the noise image will become apparent now, as we dive into the shader code.

To give you an idea of suitable values for these variables, here are the ones I used (the screenshot omits the parameters configured in the previous article for the static reflection):

[Figure: Shader parameter values]

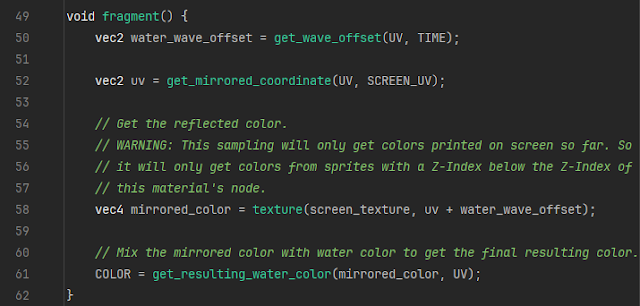

Taking the above into account, if we look at the main function of the shader, fragment(), we’ll see that there’s very little difference from the reflection shader.

[Figure: fragment() shader method]

If we compare it to the code from the reflections article, we’ll notice the new addition on line 50: a call to a new method, get_wave_offset(), which returns a two-dimensional vector that is then added to the UV coordinate used to sample (in line 58) the color to reflect.

What’s happening here? Well, the distortion effect of the reflection is achieved not by reflecting the color that corresponds to a specific point but by reflecting a color from a nearby point. What we will do is traverse the noise image. This image has colors ranging from black to pure white; that is, from a value of 0 to 1 (simultaneously in its three channels). For each UV coordinate we want to render for the water rectangle, we’ll sample an equivalent point in the noise image, and the amount of white in that point will be used to offset the sampling of the color to reflect by an equivalent amount in its X and Y coordinates. Since the noise image does not have abrupt changes between black and white, the result is that the offsets will change gradually as we traverse the UV coordinates, which will cause the resulting distortion to change gradually as well.
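To make the idea of "reflecting a nearby point" concrete, here is a minimal, self-contained sketch assuming Godot 4. It is not the code from the repository: the reflection math is a simplified stand-in for the one in the reflections article, mirror_line_v is a hypothetical uniform I introduce only for illustration, the default values are assumptions, and get_wave_offset() is reduced to its bare core here.

```glsl
shader_type canvas_item;

// What has been drawn on screen so far; the source of the reflected colors.
uniform sampler2D screen_texture : hint_screen_texture, filter_linear;

// Hypothetical: vertical screen coordinate (0..1) of the water's top edge.
uniform float mirror_line_v = 0.5;

uniform float wave_strength = 0.02; // assumed value
uniform float wave_speed = 0.05;    // assumed value
uniform sampler2D wave_noise : repeat_enable;

// Bare-bones stand-in: the noise value at a time-shifted coordinate,
// scaled down to a small UV offset.
vec2 get_wave_offset(vec2 uv, float time) {
    vec2 noise = texture(wave_noise, uv + vec2(time * wave_speed)).rg;
    return noise * wave_strength;
}

void fragment() {
    vec2 wave_offset = get_wave_offset(UV, TIME);

    // Mirror the current screen position across the water's top edge.
    vec2 mirrored_uv = vec2(SCREEN_UV.x, 2.0 * mirror_line_v - SCREEN_UV.y);

    // Sample a nearby point instead of the exact mirrored one.
    COLOR = texture(screen_texture, mirrored_uv + wave_offset);
}
```

With wave_strength set to 0, the offset vanishes and the sketch falls back to a plain static mirror, which is a quick way to check that only the offset produces the distortion.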

Let’s review the code for the get_wave_offset() method to better understand the above:

[Figure: Code for the get_wave_offset() method]

The method receives the UV coordinate being rendered and the number of seconds that have passed since the game started as parameters.

Line 42 of the method relates to the wave_threshold parameter we discussed earlier. When rendering the water rectangle from its top edge, we don’t want the distortion to act with full force from that very edge because it could generate noticeable aberrations. Imagine, for example, a person standing at the water’s edge. If the distortion acted with full force from the top edge of the water, the reflection of the person’s feet would appear disconnected from the feet, which would look unnatural. So, line 42 ensures that the distortion gradually increases from 0 until UV.y reaches the wave_threshold, after which the strength remains at 1.

Line 43 samples the noise image using a call to the texture() method. If we were to simply sample using the UV coordinate alone, without considering time or speed, the result would be a reflection with a static distorted image. Let’s assume for a moment that this was the case, and we didn’t take time or speed into account. What would happen is that for each UV coordinate, we would always sample the same point in the noise image, and therefore always get the same distortion vector (for that point). However, by adding time, the sampling point in the noise image changes every time the same UV coordinate is rendered, causing the distortion image to vary over time. With that in mind, the multiplicative factors are easy to understand: wave_speed causes the noise image sampling to change faster, which speeds up the changes in the distorted image; multiplying the resulting distortion vector by wave_strength_by_distance reduces the distortion near the top edge (as we explained earlier); and multiplying by wave_strength increases the amplitude of the distortion by scaling the vector returned by the method.
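The steps described above could be sketched as follows, assuming Godot 4. This is my reconstruction from the description, not necessarily the exact code in the repository: the linear ramp, the guard against a zero threshold, the use of the red and green channels, and the default values are all assumptions.

```glsl
shader_type canvas_item;

uniform float wave_strength = 0.02; // assumed value
uniform float wave_speed = 0.05;    // assumed value
uniform float wave_threshold : hint_range(0.0, 1.0) = 0.11;
uniform sampler2D wave_noise : repeat_enable;

vec2 get_wave_offset(vec2 uv, float time) {
    // Ramp from 0 at the top edge to 1 at uv.y == wave_threshold, then stay
    // at 1. With wave_threshold = 0.11, uv.y = 0.055 gives 0.5.
    // max() avoids a division by zero if the threshold is set to 0.
    float wave_strength_by_distance =
            clamp(uv.y / max(wave_threshold, 0.0001), 0.0, 1.0);

    // Shift the sampling point over time so that the same UV coordinate
    // reads a different part of the noise image on every frame.
    vec2 noise_uv = uv + vec2(time * wave_speed);

    // A grayscale noise image has the same value in every channel, so the
    // X and Y offsets end up equal.
    vec2 noise = texture(wave_noise, noise_uv).rg;

    // Scale by the overall amplitude and by the ramp near the top edge.
    return noise * wave_strength * wave_strength_by_distance;
}
```

Because the noise values lie between 0 and 1, this sketch always pushes the sample in the same diagonal direction. Subtracting 0.5 from the noise before scaling would center the offsets around zero, if that looks better in your scene.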

And that’s it—the vector returned by get_wave_offset() is then used in line 58 of the fragment() method to offset the sampling of the point being reflected in the water. The effect would be like the lower part of this figure: